final-project-stack

final-project-skeleton

* Team Name: Stack

* Team Members: Guanlin Li & Yudong Liu & Jiajun Chen

* Github Repository URL: https://github.com/upenn-embedded/final-project-stack

* Github Pages Website URL: https://upenn-embedded.github.io/final-project-stack/

* Description of hardware: ESP32, ATmega328PB, Nano 33 IoT

Final Project Report

1. Video

https://github.com/user-attachments/assets/94df1cdc-a7fc-4cc9-9b69-86574b1cf152

The video is also available at ./videos/final_demo_lite.mp4

2. Images

3. Results

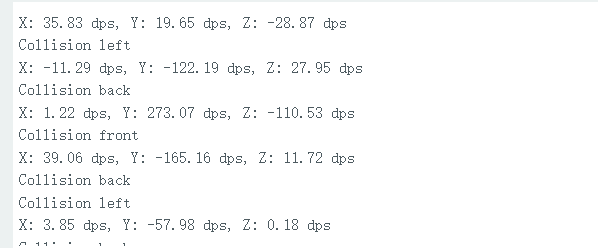

The team’s system design consists of two main components: a smart furniture module powered by an ATmega328PB microcontroller and a wand controller centered around the Nano 33 microcontroller, equipped with Wi-Fi signal transmission capabilities. The wand integrates a power supply system using three AA batteries to ensure stable power delivery to the Nano 33 and the WS2812b light strip. The Nano 33 leverages its onboard gyroscope and Inertial Measurement Unit (IMU) to capture real-time angular velocity and motion data, which form the basis for gesture recognition.

Figure 1: The Overview of Design Schematic

The motion data collected by the Nano 33 is aggregated and processed to train machine learning models aimed at accurately classifying three distinct wand gestures: circular, triangular, and linear motions. The resulting trained parameter matrix is then deployed directly onto the Nano 33, enabling on-device gesture recognition. When the user performs a gesture, the wand processes the motion data and encodes the recognized gesture into an 8-bit binary signal (e.g., 1 for a circle, 2 for a triangle, and 3 for a line). This signal is transmitted wirelessly via the Nano 33’s Wi-Fi module to an ESP32 device connected to the same local network.

Figure 2: The Flow Diagram Of the Adapted Program Design

The ESP32 is configured with a static IP address defined at the software level, ensuring reliable communication within the network. Upon receiving the gesture signal, the ESP32 forwards it to the ATmega328PB microcontroller using the SPI communication protocol. The ATmega328PB then decodes the signal and executes the corresponding predefined actions, such as controlling lighting, activating a water pump, or driving a motor. This integrated system demonstrates a seamless interaction between the wand and the smart furniture, achieving the team’s vision of a gesture-based controller for smart home automation. Each subsystem is designed with modularity and scalability in mind, ensuring a robust and efficient implementation of the team’s concept.

3.1 Software Requirements Specification (SRS) Results

- Gesture recognition accuracy. Achieve at least 80% gesture accuracy, minimizing false positives/negatives. Importance: Ensures that users can reliably control home devices without frustration or repeated attempts.

- Test method:

We performed each posture 100 times and recorded the recognized result of every attempt. The graph below shows the simulation results:

Figure 10: The Simulation Results

The Summary Table:

| Posture | Total Tests | Accuracy | Misrecognized as Gesture 1 | Misrecognized as Gesture 2 | Misrecognized as Gesture 3 |
|---|---|---|---|---|---|
| 1 | 100 | 90% | 0 | 5 | 5 |
| 2 | 100 | 89% | 7 | 0 | 4 |
| 3 | 100 | 95% | 3 | 2 | 0 |

The test results show that all three postures exceed the 80% accuracy requirement.
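As a cross-check on the summary table, the per-posture and overall accuracy can be recomputed from the raw counts. The sketch below is illustrative only: the matrix layout and the function names are assumptions, not the team's test script.

```c
#include <stddef.h>

#define NUM_GESTURES 3

/* Rows: performed posture; columns: recognized posture.
 * Counts are taken from the summary table (diagonal = correct). */
static const unsigned confusion[NUM_GESTURES][NUM_GESTURES] = {
    {90, 5, 5},
    {7, 89, 4},
    {3, 2, 95},
};

/* Accuracy of one posture in percent: correct / total trials in that row. */
double gesture_accuracy(const unsigned m[NUM_GESTURES][NUM_GESTURES], size_t g)
{
    unsigned total = 0;
    for (size_t j = 0; j < NUM_GESTURES; j++)
        total += m[g][j];
    return total ? 100.0 * m[g][g] / total : 0.0;
}

/* Overall accuracy in percent across all postures. */
double overall_accuracy(const unsigned m[NUM_GESTURES][NUM_GESTURES])
{
    unsigned correct = 0, total = 0;
    for (size_t i = 0; i < NUM_GESTURES; i++) {
        correct += m[i][i];
        for (size_t j = 0; j < NUM_GESTURES; j++)
            total += m[i][j];
    }
    return total ? 100.0 * correct / total : 0.0;
}
```

With the counts from the table, 274 of 300 attempts are recognized correctly, which is roughly 91.3% overall.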

- Latency requirement. As we stated in the proposal, the latency of the wand should be lower than 200ms in order to achieve a seamless control.

- Test method: We performed each posture 100 times and recorded the latency of every attempt.

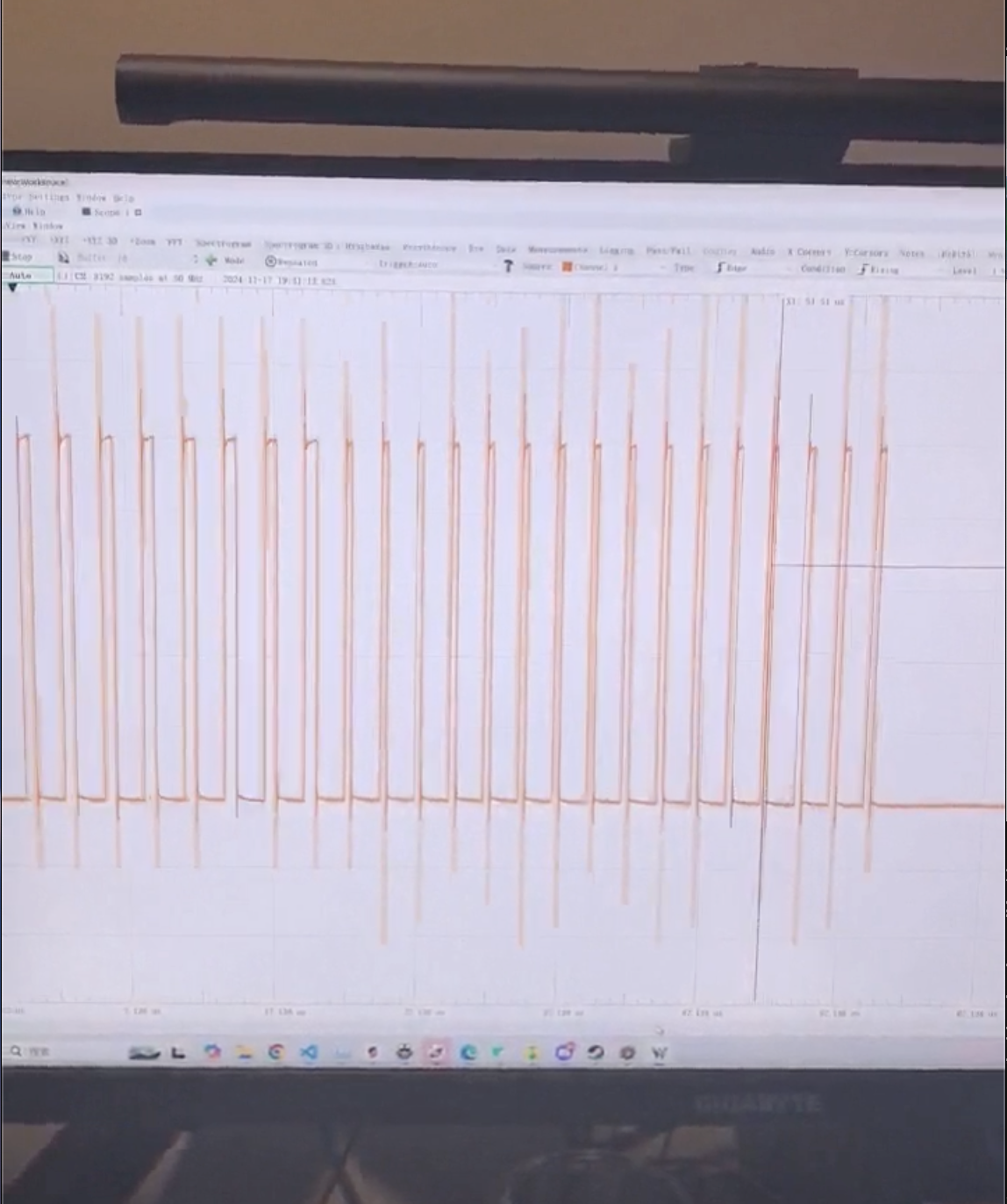

As the image shows, the average latency is below 200ms. Therefore, this target is achieved.

- User feedback. We stated in our proposal that we would ask people to try our device and provide feedback. Unfortunately, this was not completed because the project was finished late, and there was not enough time for the team to recruit people to test the device.

3.2 Hardware Requirements Specification (HRS) Results

Battery performance: At 5 V, the current is around 60 mA when the light is on. Three standard alkaline batteries provide around 2850 mAh at 4.5 V. Based on this data, a rough estimate of the continuous use time is $\frac{2850}{60} \approx 47.5$ hours. This exceeds the target requirement of 4 hours. Therefore, the battery performance meets its requirement.
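The estimate above is a simple capacity-over-current division. A minimal sketch of that arithmetic follows; the function name is hypothetical, and the model deliberately ignores regulator losses, voltage sag, and capacity derating, so the result should be read as an upper bound.

```c
/* Estimated runtime in hours: capacity (mAh) divided by average current (mA).
 * Idealized model; returns 0 for a non-positive current. */
double battery_runtime_hours(double capacity_mah, double current_ma)
{
    return current_ma > 0.0 ? capacity_mah / current_ma : 0.0;
}
```

For 2850 mAh and 60 mA this gives 47.5 hours, about 11.9 times the 4-hour target.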

4. Conclusion

In this project, we delved deeply into various aspects of MCU development, exploring both familiar and unfamiliar platforms. The experience extended from foundational circuit-level programming on the ATmega328, progressing through advanced SPI communication on the ESP32, to managing interrupts on the Nano 33. By directly manipulating registers and developing drivers, we gained a profound understanding of these MCUs, allowing us to implement core functionalities effectively and seamlessly integrate them into our project.

A notable achievement was the development of hardware drivers. For example, the motor driver required generating a 50Hz PWM signal with a variable duty cycle, which performed as expected. However, writing the driver for the LED light strip proved significantly more challenging. While an initial approach leveraging the SPI protocol was considered, the SPI signal generated by the ATmega328PB introduced gaps every 8 bits, necessitating a switch to the bit-banging method. This adaptive approach showcased our problem-solving capabilities and strengthened our understanding of low-level communication protocols.

Throughout the project, we encountered and overcame difficulties related to integrating multiple components and systems, such as synchronizing the gesture recognition algorithm with hardware control mechanisms. The algorithm, which performed flawlessly, highlighted the synergy between software and hardware in achieving the project’s goals. Looking forward, a potential enhancement would involve integrating machine-learning-based algorithms onto the MCU to detect intentional gestures more accurately, further advancing the system’s functionality.

Our project also emphasized the importance of teamwork and proactive planning. From defining functional goals at the outset to achieving them through close collaboration, we were able to complete the project as scheduled. This accomplishment reflected not only individual contributions but also the cohesive efforts of the entire team.

The breadth of this project was another significant aspect, encompassing topics such as digital signal processing, serial communication protocols, PWM, interrupts, and wireless transmission. Tackling these diverse challenges required iterative problem-solving, adaptability, and a willingness to engage with new concepts. Ultimately, this integrative experience provided a comprehensive understanding of MCU development and its practical applications, leaving us with a sense of pride in our accomplishments and gratitude for the collaborative effort.

5. Some notes

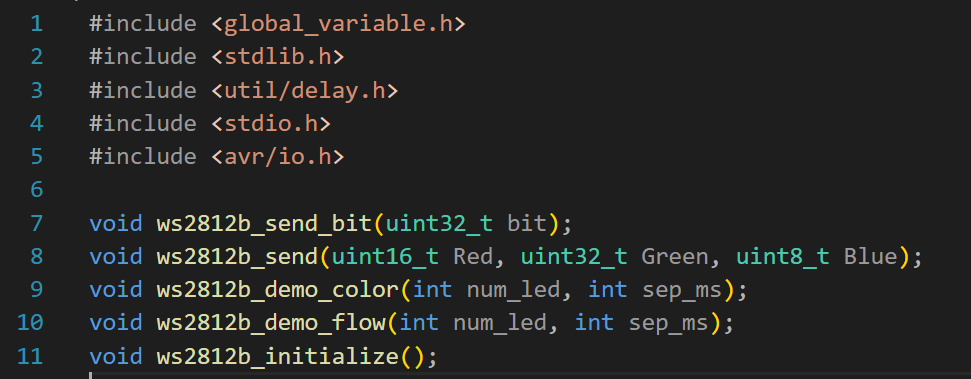

The implementation for the ATmega328PB is written in pure bare-metal C without external libraries. The interrupt on the Nano33 is also implemented without external libraries. These should cover at least 3 required topics. For the remaining parts, which use external libraries to some extent, please check the appendix below.

6. Appendix (Library)

Math.h

We used math.h for data preprocessing. The functions we used include the absolute value function (abs) for measuring the change between two adjacent sensor readings, the sin and cos functions for time-series data filtering and normalization, and the square root function (sqrt) for calculating the standard deviation of a time-series sequence for normalization. All of these functions could be implemented using the bitwise operations AND, OR, NOT, XOR and SHIFT.

Wifi.h:

We use this library to establish the connection between the Nano33 and the ESP32. The WiFi.h library for the Arduino Nano 33 series provides an abstraction layer for interacting with the Wi-Fi hardware. At the bare-metal C level, the board uses the u-blox NINA-W102, a Wi-Fi and Bluetooth combo module that communicates with the microcontroller over a Serial Peripheral Interface (SPI). At a lower level, the Wi-Fi connection is established through the following procedures:

- The Wi-Fi library communicates directly with the u-blox module through SPI commands, such as configuring SPI pins (MISO, MOSI, SCK, CS) and setting up the SPI controller to communicate at the right baud rate. To prepare for data sending, it also needs to reset commands to the Wi-Fi module (via GPIO pins or SPI) to ensure the module starts in a clean state.

- After the hardware interface is initialized, the module bootloader receives SPI commands from the microcontroller to configure the Wi-Fi stack, such as the SSID and security type, as well as a connection status flag that indicates the state of the network connection.

- The Wi-Fi stack for the u-blox NINA-W102 module is based on AT commands sent by low-level SPI operations such as spi_write("AT+CWJAP=\"SSID\",\"password\""); via direct memory access. The SPI module uses interrupts to read and handle incoming data.

- After connecting to the network, it starts to use TCP/IP and sockets for sending the data through IP, port, and connection type. The module uses interrupts to handle incoming data or changes in Wi-Fi status. For example, SPI interrupts can notify the microcontroller when data has been received from the Wi-Fi module.

The library functions we used include:

- Setting up the Wi-Fi connection through WiFi.begin(SSID, Password)

- Connecting to a device through its local IP and port using WiFiClient.connect(IP, portNumber)

- Checking the status of the Wi-Fi connection through WiFi.status(). It yields the WL_CONNECTED status if a connection is established, and WL_DISCONNECTED if the connection is lost

- We use WiFiClient.println() in Arduino to send data from the Nano33 to an ESP32 over a TCP/IP connection

- We use WiFiClient.readString() on the ESP32 to read data sent from the Nano33 over the TCP connection.

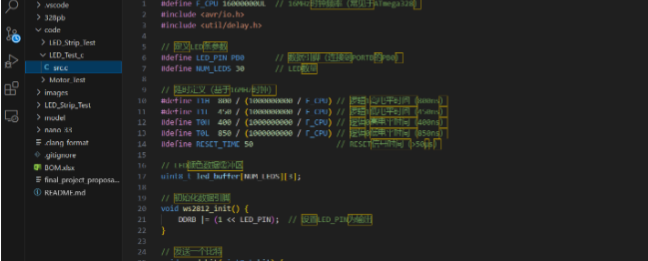

FastLED.h:

This library uses the bit-banging method to implement the driver for the ws2812b light strip. The basic method is the same as how I’ve implemented it on the ATmega328PB using bare metal C code.

SPI.h

In this project, the ESP32 acts as the SPI master, while the ATmega functions as the slave. We implemented the SPI controller on the ATmega328PB side using bare-metal C code and without any external library. We use SPI.h on the ESP32 side to help us finish the project faster.

Data Transfer Mechanism

The spi.h library facilitates the following operations for data transfer:

- Data Sending: The ESP32 sends commands or data to the ATmega via the MOSI line.

- Data Receiving: The ESP32 receives data from the ATmega via the MISO line.

- Data Frame Structure:

- Communication often uses fixed-length data frames, typically consisting of:

- Command Byte: Indicates the operation type (e.g., read or write).

- Data Segment: Contains the actual data being transmitted.

- Checksum (optional): Ensures data integrity.
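The frame layout described above can be sketched as follows. This is an illustration under stated assumptions, not the project's wire format: the 3-byte length, the XOR checksum, and the names frame_build and frame_is_valid are all hypothetical.

```c
#include <stdint.h>
#include <stddef.h>

#define FRAME_LEN 3 /* command byte + one data byte + checksum (assumed layout) */

/* XOR of all bytes; a simple checksum chosen purely for illustration. */
static uint8_t xor_checksum(const uint8_t *buf, size_t len)
{
    uint8_t sum = 0;
    for (size_t i = 0; i < len; i++)
        sum ^= buf[i];
    return sum;
}

/* Fill a frame: [command, data, checksum over the first two bytes]. */
void frame_build(uint8_t frame[FRAME_LEN], uint8_t command, uint8_t data)
{
    frame[0] = command;
    frame[1] = data;
    frame[2] = xor_checksum(frame, FRAME_LEN - 1);
}

/* Return 1 if the received frame's checksum matches, 0 otherwise. */
int frame_is_valid(const uint8_t frame[FRAME_LEN])
{
    return xor_checksum(frame, FRAME_LEN - 1) == frame[FRAME_LEN - 1];
}
```

The receiver would call frame_is_valid() before acting on the command byte, so a corrupted byte is dropped instead of toggling a device.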

Synchronization Mechanism:

- The spi_transfer() function performs a complete send-and-receive operation in sync.

- The ATmega responds synchronously based on the SCLK signal.

Library Structure

The spi.h library provides a high-level interface for SPI communication and consists of the following components:

(1) Configuration Functions

These functions initialize SPI communication parameters, including:

- SPI Mode: Defines the clock polarity (CPOL) and phase (CPHA). Common configurations are Mode 0 or Mode 1.

- Clock Frequency: Determines the data transfer speed.

- Bit Order: Specifies whether data is transmitted Most Significant Bit (MSB) first or Least Significant Bit (LSB) first.

Example:

spi_bus_config_t bus_config = {

.mosi_io_num = PIN_NUM_MOSI,

.miso_io_num = PIN_NUM_MISO,

.sclk_io_num = PIN_NUM_CLK,

.quadwp_io_num = -1,

.quadhd_io_num = -1

};

spi_bus_initialize(HSPI_HOST, &bus_config, 1);

(2) Data Transfer Functions

The key functions for data transfer include:

- spi_transfer(): Performs a complete transaction, including sending and receiving data simultaneously.

- spi_write() and spi_read(): Perform write-only or read-only operations, respectively.

spi_transaction_t trans = {

.length = 8 * sizeof(data_to_send), // transfer length in bits; data_to_send must be an array, not a pointer

.tx_buffer = data_to_send,

.rx_buffer = data_received

};

spi_device_transmit(spi_handle, &trans);

(3) Interrupts and Event Handling

- The SPI driver on the ESP32 supports event callbacks, such as triggering an interrupt when a transfer completes.

- Interrupts can be configured to synchronize communication with the ATmega.

Arduino.h

We only use the Serial class for debugging purposes, so it could be removed without problems.

Presentation

1. Class topics we’ve covered:

1.1 SPI: It is used for data transmission between the ESP32 S2 and the Atmega328. The ESP32 receives commands from the Nano33 via Wi-Fi and transmits them to the Atmega328, enabling it to control the furniture. Two versions of the communication protocol were implemented on the ESP32: one in bare C and the other using the SPI.h library. All code on the Atmega328 was written in bare C.

1.2 Wireless communication: Wi-Fi was utilized to enable wireless connection and data transmission between the ESP32 and Nano33. Both devices employed the WiFi.h library, but they were manually configured to connect within a local network with assigned IP addresses to complete the data reception logic.

1.3 Interrupts: In the Nano33, we utilized interrupts to enable the MCU to periodically receive data from the IMU and store it in a sequence. This approach ensured the formation of a temporally uniform dataset, unaffected by variations in computation time during each recognition process. This was achieved by directly accessing the Nano33 registers to configure the interrupt and set its frequency to 10 Hz.

1.4 Digital Data Processing: In the posture recognition module, we designed and implemented an algorithm tailored to our application needs. This algorithm analyzes a complete data sequence to determine the number of inflection points, which are then compared to identify the gesture’s shape and corresponding command. For certain complex mathematical operations, the math library was utilized.

1.5 Timer(PWM): We designed a system to adjust the duty cycle of the PWM signal, enabling the motor to rotate from 0 to 90 degrees to achieve the door-opening and closing functionality.
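For the PWM topic above, the mapping from a door angle to a timer compare value can be sketched as below. Every constant is an assumption for illustration: a 1000 to 2000 microsecond pulse range for 0 to 180 degrees (typical of hobby servos, but it varies by model), a 16 MHz clock with a prescaler of 8, and the 50 Hz period mentioned in the conclusion. The function names are hypothetical.

```c
#include <stdint.h>

#define SERVO_MIN_US 1000u   /* assumed pulse width at 0 degrees */
#define SERVO_MAX_US 2000u   /* assumed pulse width at 180 degrees */
#define TICKS_PER_US 2u      /* assumed: 16 MHz clock / prescaler 8 */
#define PWM_PERIOD_US 20000u /* 50 Hz period */

/* Timer TOP value for one 20 ms PWM period. */
uint16_t servo_pwm_top(void)
{
    return (uint16_t)(PWM_PERIOD_US * TICKS_PER_US - 1u);
}

/* Pulse width in microseconds for an angle clamped to 0..180 degrees. */
uint16_t servo_pulse_us(uint8_t angle_deg)
{
    if (angle_deg > 180u)
        angle_deg = 180u;
    return (uint16_t)(SERVO_MIN_US +
                      (uint32_t)angle_deg * (SERVO_MAX_US - SERVO_MIN_US) / 180u);
}

/* Compare-register value (in timer ticks) for the given angle. */
uint16_t servo_compare_ticks(uint8_t angle_deg)
{
    return (uint16_t)(servo_pulse_us(angle_deg) * TICKS_PER_US);
}
```

Under these assumptions, the closed door (0 degrees) corresponds to a 1.0 ms pulse and the open door (90 degrees) to a 1.5 ms pulse within each 20 ms period.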

2. Input device

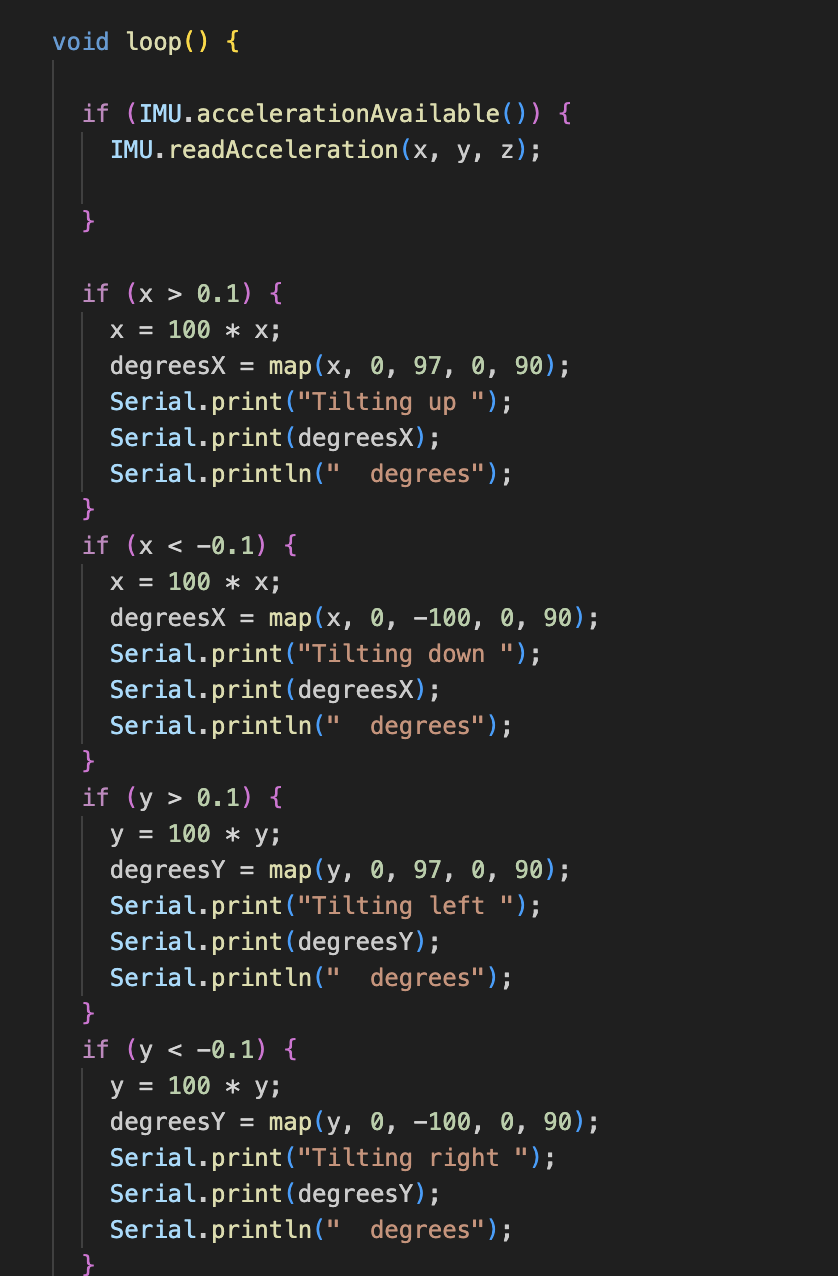

The IMU & Gyroscope sensor: Responsible for collecting angular velocity and acceleration data during the motion of the wand to facilitate data processing. This data is subsequently input into the Nano 33.

3. Output Device

Motor: Responsible for controlling the opening and closing of the door, which is regulated by a PWM signal.

LED light strip: They were applied to the smart home model and the wand separately. One was used to demonstrate the wand’s ability to control the status of lights, while the other was integrated into the wand to provide feedback for different commands, enhancing its visual appeal and interactivity.

Relay: Controlled by simple signals, it essentially functions as a switch to control the water pump.

4. Complexity (what was difficult in your project and on what things did you spend a large amount of time?)

4.1 Driver of the LED strip

The LED strip requires precisely timed signals (on the order of 0.4 microseconds) to control the light. We first tried to drive it with SPI, but the ATmega328PB outputs a gap (2 cycles long, with the line pulled low) between two consecutive 8-bit SPI transmissions, which breaks the required timing. Therefore, after some research, we switched to the bit-banging method, which uses assembly code to toggle the GPIO pin at the required frequency.

4.2 Adapting different Hardware Platforms

The development of the ESP32 and Nano33 was a critical aspect of the project. Notably, we worked on three different MCUs, each with unique and significant functionalities. For example, the ESP32 required configuring SPI in Master mode, while the Nano33 involved direct register access to set up a 10 Hz interrupt.

Both chips, being part of integrated modules, presented additional challenges. The ESP32 was part of the ESP FeatherS2 board, and the Nano33 included components like an IMU, resulting in highly complex GPIO mappings. The available documentation and resources typically relied on pre-built libraries, making bare-metal C development exceptionally challenging due to differing implementations across MCUs.

We conducted extensive research and referred to numerous resources and forums to understand how to achieve our desired functionality via direct register access. Ultimately, both parts were successfully implemented using bare-metal C, overcoming the inherent complexities and demonstrating a deep understanding of each MCU’s architecture.

4.3 Algorithm for recognizing Gestures

Recognizing gestures is a complicated task because there are no existing datasets or ready-to-use algorithms that fit our use case and sensors. Developing a recognition algorithm also requires software-hardware co-design, since it must run properly on a platform with limited computational power. The difficulties come from three aspects: data collection, data filtering (preprocessing), and computation.

The data for gesture recognition is sampled from the Nano33’s onboard IMU and gyroscope using built-in packages. To ensure consistent readings from the gyroscope and IMU, we added interrupts to the Nano33. This allows us to read data every 100 ms, avoiding irregular and inconsistent readings caused by varying processing times of the data matrix.
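A 100 ms sampling period corresponds to a 10 Hz timer interrupt. The sketch below shows how a compare value for such a timer could be derived. It assumes a timer that counts from 0 up to the compare value, a 48 MHz timer clock, and a prescaler of 1024; the prescaler and the function name are hypothetical, and the actual register setup on the Nano33 is not shown.

```c
#include <stdint.h>

/* Compare value for a timer that counts from 0 up to the returned value and
 * then fires: ticks_per_period - 1, with ticks = f_clk / (prescaler * f_irq).
 * Integer division truncates, so the real rate can be slightly above f_irq. */
uint32_t timer_compare_value(uint32_t f_clk_hz, uint32_t prescaler, uint32_t f_irq_hz)
{
    if (prescaler == 0 || f_irq_hz == 0)
        return 0;
    uint32_t ticks = f_clk_hz / (prescaler * f_irq_hz);
    return ticks ? ticks - 1 : 0;
}
```

For example, timer_compare_value(48000000, 1024, 10) yields 4686, that is, 4687 timer ticks per interrupt period.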

One of the issues we encountered was the race condition of reading and updating the gyroscope data, and using it for computation. We solved this issue using a cyclic queue with semaphores.

Each time a new sample is read from the IMU and gyroscope, we insert it into a cyclic queue with a maximum capacity of N elements. The purpose of the queue is to allow concurrent O(1) insertion and deletion of sensor data. During computation, the main thread keeps popping elements from the queue to detect possible sharp turns and shifts in the IMU and gyroscope data, while the timer interrupt is triggered once every 100 ms to collect data from the register that stores the IMU readings. Because the two sets of operations execute asynchronously, we use semaphores to lock the queue during insertion, popping, element counting and copying. This ensures there are no race conditions in the queue.
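The queue described above can be sketched as a fixed-size ring buffer. This is a simplified illustration, not the project's code: the type and function names are hypothetical, the capacity is arbitrary, and the semaphore locking is omitted, so on the target each call would need to be wrapped in the lock described in the text.

```c
#include <stdint.h>
#include <stddef.h>

#define QUEUE_CAPACITY 8 /* N in the text; the value here is arbitrary */

typedef struct {
    float gx, gy, gz; /* one gyroscope sample (field names are illustrative) */
} sample_t;

typedef struct {
    sample_t items[QUEUE_CAPACITY];
    size_t head;  /* index of the oldest element */
    size_t count; /* number of stored elements */
} ring_queue_t;

void queue_init(ring_queue_t *q)
{
    q->head = 0;
    q->count = 0;
}

/* O(1) insert. When the queue is full, the oldest element is overwritten.
 * Returns 1 if an old sample was dropped, 0 otherwise. */
int queue_push(ring_queue_t *q, sample_t s)
{
    size_t tail = (q->head + q->count) % QUEUE_CAPACITY;
    q->items[tail] = s;
    if (q->count == QUEUE_CAPACITY) {
        q->head = (q->head + 1) % QUEUE_CAPACITY; /* drop the oldest sample */
        return 1;
    }
    q->count++;
    return 0;
}

/* O(1) removal of the oldest element. Returns 0 if the queue is empty. */
int queue_pop(ring_queue_t *q, sample_t *out)
{
    if (q->count == 0)
        return 0;
    *out = q->items[q->head];
    q->head = (q->head + 1) % QUEUE_CAPACITY;
    q->count--;
    return 1;
}
```

The return value of queue_push() makes an overwrite visible to the caller, which is one way to notice that processing has fallen behind sampling.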

Another issue we need to handle is the loss of packets when collecting time-series data for computation (new data overwrites old data in the queue before it is processed and popped). To keep our training data format consistent and to record unintended data losses caused by processing latency, we label each sample with a unique id that increments with every sample. Before we perform computation, we measure the gap in id value between two consecutive IMU data samples to make sure they differ by exactly 1. Otherwise, we clear the queue to restart data collection.
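The id check described above amounts to verifying that consecutive ids differ by exactly one. A minimal sketch, with a hypothetical function name and an assumed 32-bit id counter:

```c
#include <stdint.h>
#include <stddef.h>

/* Return 1 if every id is exactly one more than its predecessor, else 0.
 * Unsigned subtraction keeps the check valid across counter wrap-around. */
int ids_are_consecutive(const uint32_t *ids, size_t n)
{
    for (size_t i = 1; i < n; i++) {
        if ((uint32_t)(ids[i] - ids[i - 1]) != 1u)
            return 0;
    }
    return 1;
}
```

A result of 0 would correspond to the case where the queue is cleared and data collection restarts.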

Due to the intense workload of implementing networks from scratch on the MCU and the huge latency introduced by its inference, we choose to use a simpler thresholding method for gesture recognition on denoised time-series IMU data. Each time we pop a new element from the queue, we compare it with the previous element for Euclidean distance. If the two IMU data vectors are close enough (within a certain threshold), we ignore the data and throw it away. Otherwise, we enter a data-collection mode and store the data into a vector, and exit the data-collection mode if the data becomes stationary for a period of time (a series of N data points have Euclidean distance within a certain threshold). After we finish collecting the data, we perform normalization on the data-series using their max and min values. We also perform principal component extraction for denoising the data series by implementing matrix computation from scratch. We measure the number of sharp turns in the denoised data series by comparing consecutive data vectors through Euclidean distance calculation. By analyzing the number of sharp turns we could classify the gesture into different shapes such as a line, triangle, square and star.
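One possible reading of the sharp-turn count is sketched below: a turn is counted each time the jump between consecutive samples rises above a threshold. This is an interpretation for illustration, not the project's algorithm. The names are hypothetical, the normalization and principal component steps are left out, and squared distances are compared so that no sqrt call is needed.

```c
#include <stddef.h>

typedef struct { float x, y, z; } vec3_t;

/* Squared Euclidean distance; comparing squares avoids a sqrt call. */
static float dist_sq(vec3_t a, vec3_t b)
{
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

/* Count sharp turns: a turn starts when the jump between consecutive
 * samples rises above the threshold and ends when it falls back below. */
size_t count_sharp_turns(const vec3_t *v, size_t n, float threshold)
{
    size_t turns = 0;
    int in_turn = 0;
    float limit = threshold * threshold;
    for (size_t i = 1; i < n; i++) {
        int sharp = dist_sq(v[i], v[i - 1]) > limit;
        if (sharp && !in_turn)
            turns++;
        in_turn = sharp;
    }
    return turns;
}
```

The resulting count would then be mapped to a shape; that mapping and the threshold value depend on the collected data and are not shown here.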

5. Integration (what things broke when you put them together and how did you deal with it?)

When connecting the ESP32 to the ATmega328PB over SPI, some packets were sent by the ESP32 but not received by the ATmega328PB. After some research, we found that the SPI clock frequency was too high for the ATmega328PB to receive data reliably. We solved this problem by lowering the SPI clock frequency.
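The clock limit can be estimated with a little arithmetic. The sketch below relies on the AVR datasheet rule that, in slave mode, the SCK high and low periods must each be longer than two CPU cycles, which bounds SCK at one quarter of the CPU clock. The function names are hypothetical, and the clock values in the usage note are assumptions rather than measured settings from this project.

```c
#include <stdint.h>

/* Highest SCK an AVR SPI slave can sample reliably: f_cpu / 4. */
uint32_t avr_slave_max_sck_hz(uint32_t f_cpu_hz)
{
    return f_cpu_hz / 4u;
}

/* Smallest integer divider d such that f_src / d <= f_max. */
uint32_t min_clock_divider(uint32_t f_src_hz, uint32_t f_max_hz)
{
    if (f_max_hz == 0)
        return 0; /* no valid divider */
    return (f_src_hz + f_max_hz - 1u) / f_max_hz; /* ceiling division */
}
```

Assuming a 16 MHz ATmega328PB and an 80 MHz SPI source clock on the master, the slave limit is 4 MHz and the master would need a divider of at least 20.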

We also encountered packet drops and connection issues when trying to connect the Nano33 to the ESP32 via WiFi. We set up the WiFi on each device separately and used a Python script to test that it was working properly. However, when we tried to connect the two devices together, they could not communicate with each other. We found that the initialization of the two parts needed to be adjusted before they could connect.

Another integration problem arose when we tried to flash the algorithm that decides which shape the user just waved onto the Nano33. The code worked fine when we compiled it locally, but moving it onto the board produced several errors that took us around a week to resolve.

MVP Demo


Home Model (ATmega328PB+ESP32)

The configuration of ATmega328PB is shown below:

Figure 3: The Wire Configuration of ATmega328PB

This block diagram illustrates the connection between the ATmega328PB microcontroller, the ESP32 module, and peripheral components. The ATmega328PB connects to peripherals as follows: PB0 handles LED, PB1 controls the motor (door), and PC0 interfaces with the pump, with Vdd providing 5v and GND providing the ground connection. The ATmega328PB communicates with the ESP32 using the SPI protocol, where PB2 connects to SS, PB3 to MOSI, PB4 to MISO and PB5 to SCK. The ESP32’s SPI pins are connected to SDI (GPIO 37), SDO (GPIO 35), SS (GPIO 33) and SCK (GPIO 36). Both the ATmega328PB and ESP32 share a common 5V power supply via Vdd and are grounded to the same GND pin. This configuration enables the ATmega328PB to control peripherals while communicating with the ESP32 for advanced processing or wireless functionality.

1. Home Model 3D Printing

The walls of our smart furniture model are created using laser cutting. The core part is designed to accommodate a servo motor, which controls the door’s opening and closing. We modeled it to ensure the servo motor can be properly installed.

Figure 4: The 3D Module of The Gate

2. Main Control

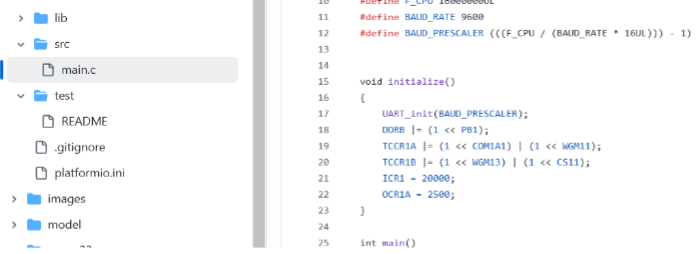

On the ATmega328PB, we continuously check whether data sent from the ESP32 has been received. Upon reception, we use an if statement to evaluate its value and execute the corresponding operation. The core bare-metal C code is shown below:

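while (1) {

if (data_received) {

if (data == 1) { door_status ^= 1; motor_angle(door_status ? 90 : 0); } // toggle the door

else if (data == 2) { light_status ^= 1; ws2812b_send(light_status ? 255 : 0, light_status ? 255 : 0, light_status ? 255 : 0); } // toggle the light: white when on, all channels 0 when off

else if (data == 3) { pump_status ^= 1; PUMP_PORT ^= (1 << PUMP_PIN); } // toggle the pump relay

}

}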

We execute a different command based on the data received.
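The dispatch logic can also be expressed as a small function that is easy to test off-target. In the sketch below the hardware calls are replaced by fields in a state structure; the enum names, the structure, and handle_command are hypothetical and only mirror the command values 1, 2 and 3 used above.

```c
#include <stdint.h>

/* Command codes as used in the main loop: 1 = door, 2 = light, 3 = pump. */
enum { CMD_DOOR = 1, CMD_LIGHT = 2, CMD_PUMP = 3 };

typedef struct {
    uint8_t door_open;
    uint8_t light_on;
    uint8_t pump_on;
} home_state_t;

/* Toggle the device selected by the command byte.
 * Returns 1 if the command was recognized, 0 if it was ignored. */
int handle_command(home_state_t *st, uint8_t cmd)
{
    switch (cmd) {
    case CMD_DOOR:
        st->door_open ^= 1u;
        return 1;
    case CMD_LIGHT:
        st->light_on ^= 1u;
        return 1;
    case CMD_PUMP:
        st->pump_on ^= 1u;
        return 1;
    default:
        return 0; /* unknown byte: leave the state untouched */
    }
}
```

On the microcontroller, each case would additionally call the matching driver, for example the motor, LED strip, or relay routine.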

3. SPI

SPI (Serial Peripheral Interface) is a high-speed, full-duplex communication protocol commonly used for short-distance communication between microcontrollers and peripheral devices. It operates with a master-slave architecture, where the master device controls the clock signal (SCK), enabling synchronized data exchange. SPI uses four main lines: MOSI (Master Out Slave In), MISO (Master In Slave Out), SCK (Serial Clock), and SS (Slave Select). It is known for its simplicity, high data transfer rate, and flexibility, making it ideal for applications like sensor data acquisition, memory interfacing, and communication between processors.

In our project, SPI is used to send instructions from the ESP32 to the ATmega328PB. To implement this communication protocol, we coded Slave mode on the ATmega328PB and Master mode on the ESP32.

3.1 Slave (ATmega328PB)

The core code to initialize Slave mode is shown below:

#include <stdlib.h>

#include <avr/interrupt.h>

#include <stdio.h>

#include <avr/io.h>

uint8_t SPI_SlaveReceive();

void SPI_Slave_initialize()

{

SPI_DDR |= (1 << SPI_MISO);

SPCR0 |= (1 << SPE);

}

uint8_t SPI_SlaveReceive()

{

while(!(SPSR0 & (1<<SPIF)));

return SPDR0;

}

- SPI_DDR: Refers to the Data Direction Register for the SPI port, where individual bits control whether a pin is an input or output. By setting SPI_MISO as output, the slave can send data back to the master.

- SPCR0 (SPI Control Register): Contains control bits for SPI configuration. The SPE bit enables the SPI module.

- SPSR0 (SPI Status Register): The SPIF (SPI Interrupt Flag) bit in this register is set when a data transfer is complete. The function polls this bit to ensure data is ready to be read.

- SPDR0 (SPI Data Register): This register holds the data received from the SPI master. Reading from it retrieves the received byte.

Overall, we enabled SPI, configured it in Slave mode, and set the data transfer mode to the default Mode 0, which must match the configuration in the ESP.

3.2 Master (ESP32)

The SPI configuration process on the ESP32 is straightforward and similar to previous setups. It involves accessing the relevant registers, enabling SPI, setting it to Master mode, and using Mode 0 for the transfer mode to ensure proper communication. By default, the ESP32 supports four SPI buses, but currently, only HSPI and VSPI are available for use. However, due to the unique GPIO mapping of the ESP32 Feather S2, the pin assignments differ, as shown in the diagram below:

Figure 6: The Pin Layout Of ESP32 Feather S2

The core code of the implementation is shown below:

- Header Files and Macro Definition

#include "esp32/rom/gpio.h"

#include "soc/spi_struct.h"

#include "soc/gpio_sig_map.h"

#include "soc/io_mux_reg.h"

#include "driver/gpio.h"

These headers provide access to the ESP32’s hardware abstraction layer (HAL) and GPIO control, enabling direct manipulation of hardware registers.

- SPI Master Initialization Function

void spi_master_init() {

gpio_set_direction(VSPI_SCK, GPIO_MODE_OUTPUT); // Set SCK as output

gpio_set_direction(VSPI_MOSI, GPIO_MODE_OUTPUT); // Set MOSI as output

gpio_set_direction(VSPI_MISO, GPIO_MODE_INPUT); // Set MISO as input

gpio_set_direction(VSPI_CS, GPIO_MODE_OUTPUT); // Set CS as output

Configures the GPIO pins for SPI functionality. SCK, MOSI, and CS are outputs; MISO is input.

SPI3.user.val = 0;

SPI3.user.doutdin = 0; // Data is not bidirectional

SPI3.user.duplex = 0; // Full duplex mode disabled

SPI3.ctrl.val = 0;

SPI3.ctrl.wr_bit_order = 0; // Use MSB-first for writes

SPI3.ctrl.rd_bit_order = 0; // Use MSB-first for reads

Maps the GPIO pins to the corresponding SPI functions using the ESP32’s GPIO matrix, then initializes the SPI3 user and control registers, configuring the bit order and the transfer mode.

SPI3.clock.val = 0;

SPI3.clock.clkcnt_n = 7; // Clock divider setup

SPI3.clock.clkcnt_h = 3;

SPI3.clock.clkcnt_l = 7;

SPI3.cmd.usr = 0; // Ensure no ongoing SPI command

}

Configures the SPI clock by setting the frequency divider. Next, we implement the SPI master send function:

void spi_master_send(uint8_t *data, int len) {

for (int i = 0; i < len; i++) {

SPI3.data_buf[i] = data[i]; // Load data into SPI buffer

}

The loop above loads the data to be transmitted into the SPI3 buffer. The rest of the function configures the data length for transmission, starts the SPI transaction, and waits for it to complete. The CS (chip select) line is toggled to signal the start and end of the transmission.

SPI3.mosi_dlen.usr_mosi_dbitlen = len * 8 - 1; // Set data length (in bits)

gpio_set_level(VSPI_CS, 0); // Assert CS (active low)

SPI3.cmd.usr = 1; // Start SPI transaction

while (SPI3.cmd.usr); // Wait until transmission completes

gpio_set_level(VSPI_CS, 1); // Deassert CS

}

4. LED

4.1 WS2812 Working Principle

The LED Strip has three ports: Vdd, GND and Signal Pin.

Individually addressable LED strips operate using a digital data protocol. The Atmega328Pb sends a signal that represents 1s and 0s through the data pin. The LEDs interpret this signal to set their colors and brightness.

For WS2812 LEDs (Common Protocol): The microcontroller sends data in the form of pulse-width modulation (PWM). The duration of the pulse determines whether it’s a 1 or 0.

Figure 9: The Protocol In WS2812 LEDs

- 0 Signal: A short high pulse followed by a longer low pulse. Example: High for 0.35 μs, then Low for 0.8 μs.

- 1 Signal: A longer high pulse followed by a shorter low pulse. Example: High for 0.7 μs, then Low for 0.6 μs.

The WS2812 LEDs decode these timing differences to differentiate between 0 and 1.

Protocol Timing: The WS2812 operates at a frequency of 800 kHz. Each LED receives a 24-bit data frame (8 bits each for Red, Green, and Blue channels).
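The 24-bit frame can be illustrated with a small packing helper. The WS2812 family expects the green byte first, then red, then blue, each most-significant bit first. The helper names below are hypothetical, and the sketch does not claim anything about the parameter order of the project's own ws2812b_send function.

```c
#include <stdint.h>

/* Pack one pixel into the 24-bit word the WS2812 expects:
 * green first, then red, then blue, each most-significant bit first. */
uint32_t ws2812_pack_grb(uint8_t r, uint8_t g, uint8_t b)
{
    return ((uint32_t)g << 16) | ((uint32_t)r << 8) | (uint32_t)b;
}

/* Bit number `index` (0 = first bit on the wire, valid range 0..23)
 * of a packed pixel. */
uint8_t ws2812_wire_bit(uint32_t grb, uint8_t index)
{
    return (uint8_t)((grb >> (23u - index)) & 1u);
}
```

At 800 kHz each bit takes 1.25 μs, so one 24-bit frame takes 30 μs per LED.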

4.2 Implementation in Atmega328Pb using Bare C

On the ATmega328PB, we wrote bare-metal C to generate the data signal. The core function is ‘ws2812b_send_bit’, which is designed to send a single bit (0 or 1) to a WS2812B LED strip by generating a pulse-width-modulated (PWM) signal with precise timing. It uses inline assembly to directly manipulate the microcontroller’s hardware registers for accurate timing control.

Sending a 1 Bit

if (bit != 0)

{

asm volatile (

"sbi %[port], %[pb] \n\t" // Set the LED pin high

"nop \n\t" // Delay to achieve ~0.8 μs

"nop \n\t"

"nop \n\t"

...

"cbi %[port], %[pb] \n\t" // Set the LED pin low

"nop \n\t" // Delay for the remaining ~0.45 μs

"nop \n\t"

...

::

[port] "I" (_SFR_IO_ADDR(LED_PORT)), // I/O port for LED

[pb] "I" (LED_PIN) // Pin connected to LED

);

}

- sbi (Set Bit in I/O Register):

- Sets the LED pin high (LED_PIN) on the specified port (LED_PORT).

- This starts the pulse.

- nop (No Operation):

- Inserts a small delay. Each nop takes 1 clock cycle.

- A sequence of nops creates a delay to ensure the high signal duration is ~0.8 μs.

- cbi (Clear Bit in I/O Register):

- Sets the LED pin low (LED_PIN).

- Ends the pulse after the high signal duration.

- More nops:

- Adds additional delay to ensure the low signal duration is ~0.45 μs.

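- Assembly Arguments:

- [port] is the I/O address of LED_PORT (obtained with _SFR_IO_ADDR), and [pb] is the bit number LED_PIN. Both are passed as immediate constants through the "I" constraint.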

Sending a 0 Bit

else

{

asm volatile (

"sbi %[port], %[pb] \n\t" // Set the LED pin high

"nop \n\t" // Delay to achieve ~0.4 μs

"nop \n\t"

...

"cbi %[port], %[pb] \n\t" // Set the LED pin low

"nop \n\t" // Delay for the remaining ~0.85 μs

...

::

[port] "I" (_SFR_IO_ADDR(LED_PORT)), // I/O port for LED

[pb] "I" (LED_PIN) // Pin connected to LED

);

}

- sbi:

- Sets the LED pin high, starting the pulse.

- nops for ~0.4 μs:

- Creates a shorter high signal duration compared to a 1 bit.

- cbi:

- Sets the LED pin low to end the high signal.

- nops for ~0.85 μs:

- Ensures the low signal duration is longer compared to a 1 bit.
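The bit-level routine above is only half of the picture: a full color has to be sent as 24 consecutive bits. The sketch below shows one way this could be layered on top of a bit sender, assuming the GRB byte order and most-significant-bit-first transmission that WS2812-family LEDs expect. `record_bit` is a hypothetical stand-in for ‘ws2812b_send_bit’ that stores bits in an array so the logic can be checked on a PC; `send_byte` and `send_color` are illustrative names, not the project’s actual functions.

```c
#include <stdint.h>

/* Hypothetical stand-in for ws2812b_send_bit: records bits instead of toggling a pin. */
uint8_t sent_bits[24];
uint8_t sent_count = 0;

void record_bit(uint8_t bit) {
    if (sent_count < 24) {
        sent_bits[sent_count++] = bit ? 1 : 0;
    }
}

/* Send one byte, most significant bit first. */
void send_byte(uint8_t value) {
    for (int i = 7; i >= 0; --i) {
        record_bit((uint8_t)((value >> i) & 1u));
    }
}

/* Send one 24-bit frame in GRB order (green, then red, then blue). */
void send_color(uint8_t red, uint8_t green, uint8_t blue) {
    send_byte(green);
    send_byte(red);
    send_byte(blue);
}
```

On the real hardware the loop overhead between bits also counts toward the low time, so the number of nops inside the bit routine would need to be tuned together with this loop.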

The light is controlled by command: 2

else if (data == 2) // toggle light

{

light_status ^= 1;

if (light_status)

{

ws2812b_send(255, 255, 255);

ws2812b_send(255, 255, 255);

ws2812b_send(255, 255, 255);

}

else

{

ws2812b_send(0, 0, 0);

ws2812b_send(0, 0, 0);

ws2812b_send(0, 0, 0);

}

}

5. Pump

The water pump is controlled by a relay, powered by a 5-24V supply. The ATmega328PB microcontroller uses the PC0 pin to control the relay, which in turn switches the pump on and off. The connection diagram is shown below:

Figure 10: The Configuration of Pump

The pump is controlled by command: 3

else if (data == 3) // toggle pump

{

pump_status ^= 1;

if (pump_status)

{

PUMP_PORT |= (1 << PUMP_PIN);

}

else

{

PUMP_PORT &= ~(1 << PUMP_PIN);

}

}

6. Motor (Door)

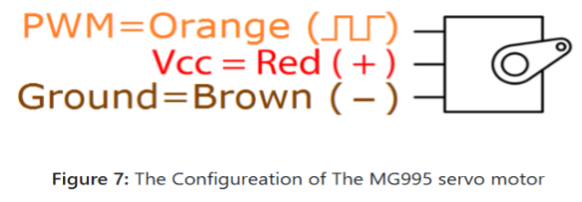

We use PWM to drive the motor so that it can rotate 90 degrees. The motor has three ports: PWM, Vdd and GND. It is powered by 5V, and the PWM signal is generated by the ATmega328PB on PB1. The connection configuration is shown below:

Figure 11: The Configuration of the Motor

To control the PWM signals, we programmed the ATmega328PB. There are two main functions:

- motor_initialize(int angle): Sets up Timer 1 in Fast PWM mode with a frequency of 50 Hz and an initial angle for the motor.

MOTOR_DDR |= (1 << MOTOR_PIN);

TCCR1A |= (1 << COM1A1) | (1 << WGM11);

TCCR1B |= (1 << WGM13) | (1 << CS11);

This function initializes the motor’s control settings using Timer 1 in Fast PWM mode and configures the motor control pin (MOTOR_PIN) as an output by setting the corresponding bit in the data direction register (MOTOR_DDR):

- COM1A1: Configures the timer’s compare match behavior to output the PWM signal on the OC1A pin (PB1) in non-inverting mode.

- WGM11: Part of the Waveform Generation Mode settings to enable Fast PWM mode.

- WGM13: Completes the configuration for Fast PWM mode using Timer 1.

- CS11: Sets the clock prescaler to 8, which determines the timer’s counting speed.

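ICR1 = 20000;

This sets the top value of the timer counter to 20000, which defines the PWM period. Assuming an 8 MHz clock and a prescaler of 8, Fast PWM gives 8 MHz / (8 × 20000) = 50 Hz (commonly used for servo motors). Note that, per the ATmega328PB datasheet, setting WGM13 and WGM11 without WGM12 selects phase-correct PWM with ICR1 as TOP; in that mode the frequency is f_clk / (2 × 8 × 20000), so 50 Hz corresponds to a 16 MHz clock.

OCR1A = angle;

This sets the initial duty cycle of the PWM signal on the OC1A pin. The value corresponds to the desired initial angle of the motor.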

- motor_angle(int angle): Converts a desired angle into a PWM pulse width and updates the timer’s compare register (OCR1A) to adjust the motor’s position.

This function adjusts the motor’s angle by setting the appropriate PWM duty cycle.

int output = (int)((long)angle * 1000 / 90) + 500; // multiply before dividing so intermediate angles are not truncated

- Converts the angle (in degrees) to a PWM pulse width in microseconds:

- For 0°, the pulse width is 500 µs.

- For 90°, the pulse width is 1500 µs.

- For 180°, the pulse width is 2500 µs.

OCR1A = output;

This updates the OCR1A register to change the PWM duty cycle and adjust the motor angle accordingly.
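The angle-to-pulse mapping described above can be checked on a PC with a small sketch like the one below. `angle_to_pulse_us` is a hypothetical helper, not the project’s function; it clamps the input to 0..180 degrees (an added assumption) and performs the multiplication in 32-bit arithmetic, since `int` is only 16 bits wide on AVR and 180 × 1000 would not fit.

```c
#include <stdint.h>

/* Map a servo angle in degrees (0..180) to a pulse width in microseconds (500..2500). */
uint16_t angle_to_pulse_us(int angle) {
    if (angle < 0) angle = 0;       /* clamp: assumption, not in the original code */
    if (angle > 180) angle = 180;
    /* Multiply before dividing, in 32-bit arithmetic, so that 45 degrees does not
       truncate to 0 and 180 * 1000 does not overflow a 16-bit int. */
    return (uint16_t)((int32_t)angle * 1000 / 90 + 500);
}
```

For example, 45 degrees maps to 1000 µs, halfway between the 0 degree and 90 degree pulse widths listed above.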

The door is controlled by command: 1

if (data == 1) // toggle door

{

door_status ^= 1;

if (door_status)

{

motor_angle(90);

}

else

{

motor_angle(0);

}

}

Wand Diagram

This diagram illustrates the integration of a Nano 33 microcontroller, an LED strip, and a battery pack in the wand basic circuit. The Nano 33 is powered by a 4.5–4.8V battery pack while sharing a common ground with the entire circuit. The LED strip, also powered by the same battery pack, receives control signals from the Nano 33 via the PB6 pin, enabling dynamic operation such as color and brightness adjustments. The setup emphasizes efficient power distribution and signal synchronization, with a shared ground ensuring proper circuit functionality.

Figure 7: The Pin Layout Of The Wand


1. Home Model 3D Printing

The walls of our smart furniture model are created using laser cutting. The core part is designed to accommodate a servo motor, which controls the door’s opening and closing. We modeled it to ensure the servo motor can be properly installed.

Figure 8: The 3D Module of The Wand

Our wand requires a wide-voltage power supply that uses three batteries together with a buck converter to provide stable power for the Nano 33. The Nano 33 connects to an LED strip that gives feedback for different gestures, offering users a good interactive experience. We therefore designed a dedicated model for it. The model includes a centrally positioned battery compartment with a knob-style latch for easy battery installation and replacement, ensuring continuous usage. Additionally, we designed a groove for the LED strip to enhance the wand’s aesthetic appeal.

2. Light Strip Control In Nano33

The LED Strip has three ports: Vdd, GND and Signal Pin. The connection configuration is shown earlier in Figure 7.

Individually addressable LED strips operate using a digital data protocol. A microcontroller (like Nano 33) sends a signal that represents 1s and 0s through the data pin. The LEDs interpret this signal to set their colors and brightness.

- For a 1 bit: The signal stays high for approximately 0.8 μs. Then it goes low for approximately 0.45 μs.

- For a 0 bit: The signal stays high for approximately 0.4 μs. Then it goes low for approximately 0.85 μs.

In our design, we wrote a function that creates a “wave-like” animation effect on an LED strip controlled by the FastLED library. It sequentially lights up LEDs in a “wave” pattern along the strip. Only one LED is lit at any given time, while the previously lit LED is turned off. The animation stops when it completes one full pass along the length of the strip. The core code is shown below:

- Step 1: Check if the Wave Animation is Enabled

if (!LED_flow_enable) return;

- Step 2: Check if the Animation is Complete

if (LED_flow_counter >= LED_NUM)

- Turn Off the Last LED:

leds[LED_NUM - 1] = CRGB::Black;

The last LED in the strip is turned off by setting its color to CRGB::Black.

- Update the LED Strip:

FastLED.show();

The FastLED.show() function sends the updated LED data to the strip to reflect the changes.

- Reset the Animation:

LED_flow_enable = 0; LED_flow_counter = 0;

- Step 3: Continue the Animation if Not Complete

else

If the wave animation is not complete (LED_flow_counter < LED_NUM), the function lights up the next LED in the sequence and turns off the previous one:

- Turn Off the Previous LED:

leds[max(LED_flow_counter - 1, 0)] = CRGB::Black;

The LED at the position LED_flow_counter - 1 (the previous LED) is turned off. The max() function ensures the index does not go below 0 (to avoid accessing out-of-bounds memory).

- Light Up the Current LED:

leds[LED_flow_counter] = LED_flow_color;

The LED at the position LED_flow_counter (the current LED) is set to the specified wave color (LED_flow_color).

- Update the LED Strip:

FastLED.show();

Sends the updated data to the LED strip to reflect the changes (turning off the previous LED and lighting up the current one).

- Increment the Counter:

++LED_flow_counter;

The counter is incremented to move to the next LED for the subsequent call of this function.

On every call to show_wave(), this function checks if the wave animation is enabled and either:

- Completes and resets the animation if the last LED has been reached.

- Lights up the current LED, turns off the previous one, and moves to the next LED if the animation is still ongoing.
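The behavior summarized above can be simulated without the FastLED library. The sketch below is a plain C model of the same three steps, with an array of 0/1 values standing in for the CRGB array; all names prefixed with `sim_` and the strip length of 5 are hypothetical and chosen only for illustration.

```c
#include <stdint.h>

#define SIM_LED_NUM 5                 /* hypothetical strip length */

uint8_t sim_leds[SIM_LED_NUM];        /* 1 = lit, 0 = off (stands in for the CRGB array) */
int sim_enable = 0;                   /* mirrors LED_flow_enable */
int sim_counter = 0;                  /* mirrors LED_flow_counter */

/* Start one pass of the wave. */
void sim_start_wave(void) {
    sim_enable = 1;
    sim_counter = 0;
}

/* One animation step, following the three steps described above. */
void sim_show_wave(void) {
    if (!sim_enable) return;                       /* Step 1: animation disabled */
    if (sim_counter >= SIM_LED_NUM) {              /* Step 2: pass finished */
        sim_leds[SIM_LED_NUM - 1] = 0;             /* turn off the last LED */
        sim_enable = 0;
        sim_counter = 0;
    } else {                                       /* Step 3: advance by one LED */
        int prev = sim_counter > 0 ? sim_counter - 1 : 0;
        sim_leds[prev] = 0;                        /* turn off the previous LED */
        sim_leds[sim_counter] = 1;                 /* light the current LED */
        ++sim_counter;
    }
}

/* Count how many LEDs are currently lit. */
int sim_lit_count(void) {
    int lit = 0;
    for (int i = 0; i < SIM_LED_NUM; ++i) lit += sim_leds[i];
    return lit;
}
```

With a strip of 5 LEDs, one full pass takes 6 calls: five to move the lit LED along the strip and one more to switch off the last LED and reset the state.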

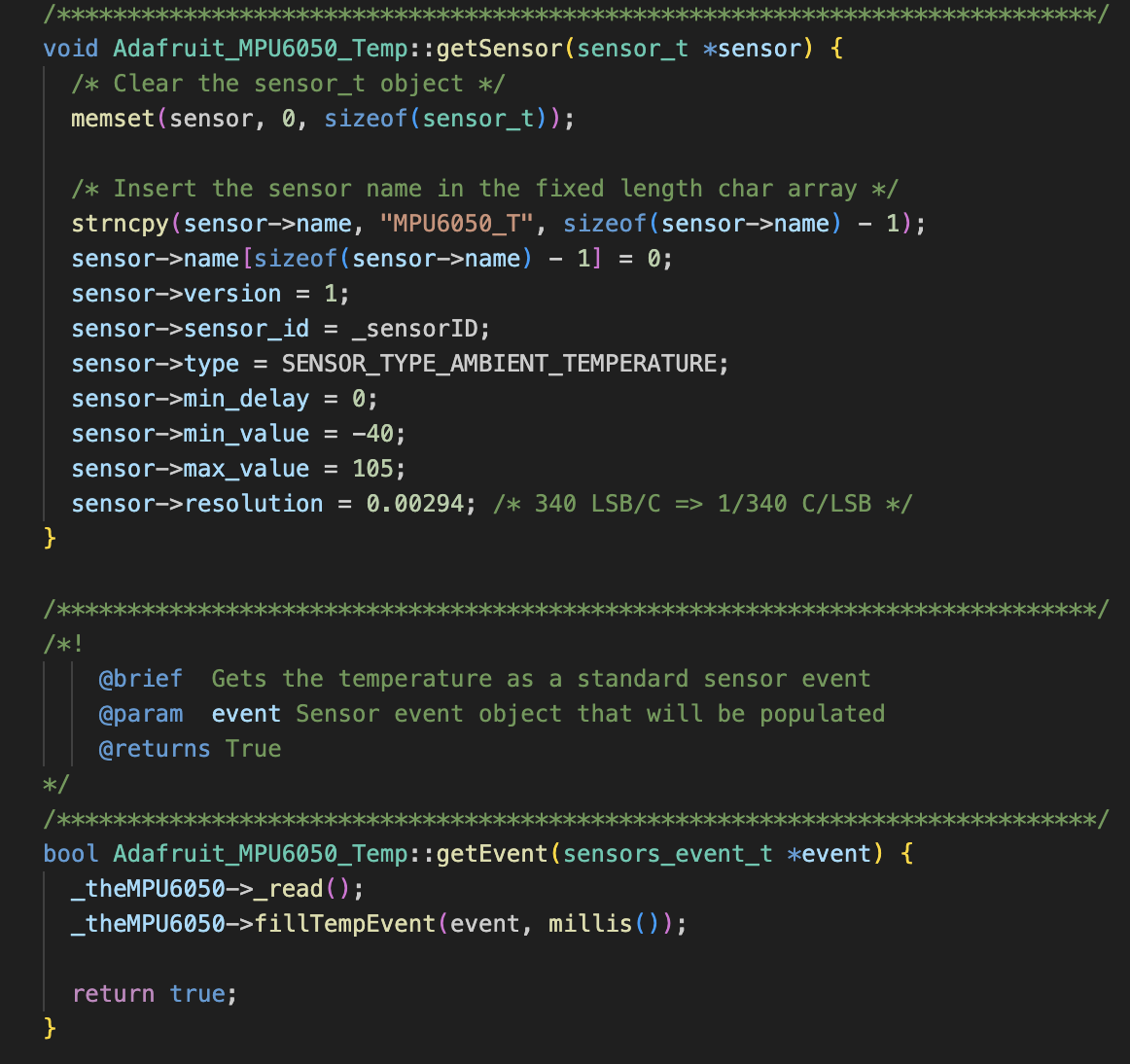

3. IMU & Gyroscope data reading and processing

Basically, the whole reading process is shown below:

Figure 8: The Data Processing Flow Chart

To ensure consistent data reading from the gyro and IMU, we added interrupts to the Nano 33. This allows us to read data every 100ms, avoiding irregular and inconsistent readings caused by varying processing times of the data matrix. This improvement helps maintain the accuracy of gesture recognition and ensures the standardization of the data.

The interrupt function is:

extern "C" void TC3_Handler()

{

// Check if the match interrupt flag is set

if (TC3->COUNT16.INTFLAG.bit.MC0)

{

// Clear the interrupt flag

TC3->COUNT16.INTFLAG.reg = TC_INTFLAG_MC0;

show_wave();

}

}

The code below configures Timer 3 (TC3) on the Nano 33 to trigger this interrupt. The aim is to run the handler every 100 ms without stopping the data processing.

void configure_timer()

{

GCLK->CLKCTRL.reg = GCLK_CLKCTRL_ID_TCC2_TC3_Val; // select TC3 peripheral channel

GCLK->CLKCTRL.reg |= GCLK_CLKCTRL_GEN_GCLK0; // select source GCLK_GEN[0]

GCLK->CLKCTRL.bit.CLKEN = 1; // enable TC3 generic clock

// Configure synchronous bus clock

PM->APBCSEL.bit.APBCDIV = 0; // no prescaler

PM->APBCMASK.bit.TC3_ = 1; // enable TC3 interface

// Configure Count Mode (16-bit)

TC3->COUNT16.CTRLA.bit.MODE = 0x0;

// Configure Prescaler for divide by 1024 (about 46.875 kHz clock to COUNT, assuming GCLK0 runs at 48 MHz)

TC3->COUNT16.CTRLA.reg |= TC_CTRLA_PRESCALER_DIV1024;

// Configure TC3 Compare Mode for compare channel 0

TC3->COUNT16.CTRLA.bit.WAVEGEN = 0x1; // "Match Frequency" operation

// Initialize compare value for about 100 ms at 46.875 kHz (46875 Hz x 0.1 s, truncated to 4687)

TC3->COUNT16.CC[0].reg = 4687;

// Enable TC3 compare mode interrupt generation

TC3->COUNT16.INTENSET.bit.MC0 = 0x1; // Enable match interrupts on compare channel 0

NVIC_EnableIRQ(TC3_IRQn); // Enable TC3 interrupt in the NVIC

// Enable TC3

TC3->COUNT16.CTRLA.bit.ENABLE = 1;

// Wait until TC3 is enabled

while(TC3->COUNT16.STATUS.bit.SYNCBUSY == 1);

NVIC_SetPriority(TC3_IRQn, 3);

NVIC_EnableIRQ(TC3_IRQn);

__enable_irq();

}
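As a rough check of the compare value used above, the sketch below computes the number of timer ticks for a given interrupt period. It assumes that GCLK0 runs at 48 MHz (the usual setting in the Arduino core for this board, but not stated in the code above); `timer_compare_value` is a hypothetical helper written for this illustration.

```c
#include <stdint.h>

/* Number of timer ticks in one period: f_clk / prescaler * period (truncated). */
uint32_t timer_compare_value(uint32_t clock_hz, uint32_t prescaler, uint32_t period_ms) {
    /* 64-bit intermediate so that clock_hz * period_ms cannot overflow. */
    uint64_t ticks = (uint64_t)clock_hz * period_ms / ((uint64_t)prescaler * 1000u);
    return (uint32_t)ticks;
}
```

Under that assumption, a 48 MHz clock divided by 1024 ticks at 46875 Hz, so a 100 ms period corresponds to 4687 ticks, which matches the value written to CC[0] and fits in the 16-bit counter.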

After reading the accelerometer and gyroscope data, we assign each sample an index_counter value that starts at 0 and increases by 1 per sample, so that the calculation process can monitor the continuity of the input data. Each sample has the form

[index, gyroX, gyroY, gyroZ, accX, accY, accZ]

We use the ISR to push the data onto a queue so that the input forms a continuous sequence of samples.

Below is the ISR handler logic we use to update the queue. A semaphore guards insertion, popping, and size checks to avoid race conditions. When the semaphore is acquired and the queue is not full, we simply push the data onto the queue. If the queue is full, we first pop the oldest element. If the index of the new sample does not directly follow the last index in the queue (the gyroscope data is not continuous), a sample was lost during collection; in this case we remove all elements from the queue and restart collecting data for calculation.

if (xSemaphoreTake(QUEUESemaphore, ENQUEUE_MAXWAIT) == pdTRUE) {

Serial.print("inserting sensory data "); // Serial.println does not accept printf-style format strings

Serial.println(sensory_data.idx);

int queue_end_idx = GyroQueue.queue_end_idx;

if (isFull(GyroQueue)) {

dequeue(GyroQueue, &PriorSensoryData);

}

if (queue_end_idx + 1 != gyro_idx) {

clear_queue(GyroQueue);

}

enqueue(GyroQueue, &sensory_data);

xSemaphoreGive(QUEUESemaphore); // release the semaphore once the queue has been updated

}

Structure of the Queue

Figure 9: Diagram and Mechanism of the cyclic queue

The sensory data is stored in a cyclic queue with a maximum capacity of N elements. The purpose of the queue is to allow O(1) insertion and deletion of sensory data. Moreover, together with the semaphore, the queue lets two concurrent threads add and remove elements without race conditions that would corrupt the memory. The queue is a data structure made up of a buffer (a contiguous chunk of memory), an index that marks the first element of the queue in the memory array, and an index that marks the slot after the last element of the queue in the array.

typedef struct {

SensoryData_t data[BUFFERSIZE]; // Array to store data

int front; // Index of the front element

int rear; // Index of the rear element

int count; // Number of elements in the queue

int queue_start_idx;

int queue_end_idx;

} GyroQueue_t;

The storage is a chunk of memory of $N \times sizeof(SensoryData)$ bytes. We use a start index and an end index to track the first and last elements of the queue. Each time a new element is inserted, we update the end of the queue to $(endIndex + 1) \pmod N$. If the queue is full, we pop the least recently inserted element by updating the start index to $(startIndex + 1) \pmod N$. Below is the implementation of inserting an element (the 6-axis sensor data) into the queue and deleting one from it, after checking whether the queue is full or empty.

int enqueue(GyroQueue_t* queue, SensoryData_t* input_data) {

if (isFull(queue)) {

return 0; // Queue is full

}

queue -> data[queue -> rear] = *input_data; // Add element to the rear

queue -> rear = (queue -> rear + 1) % BUFFERSIZE; // Update rear (wrap around)

queue -> count++; // Increase element count

queue -> queue_end_idx = input_data -> idx;

return 1;

}

// Dequeue an element

int dequeue(GyroQueue_t* queue, SensoryData_t* output_data) {

if (isEmpty(queue)) {

return 0; // Queue is empty

}

SensoryData_t output_data_tmp = queue->data[queue -> front]; // Get the front element

output_data -> idx = output_data_tmp.idx;

output_data -> gyro_Angular_X = output_data_tmp.gyro_Angular_X;

output_data -> gyro_Angular_Y = output_data_tmp.gyro_Angular_Y;

output_data -> gyro_Angular_Z = output_data_tmp.gyro_Angular_Z;

output_data -> gyro_X = output_data_tmp.gyro_X;

output_data -> gyro_Y = output_data_tmp.gyro_Y;

output_data -> gyro_Z = output_data_tmp.gyro_Z;

queue -> front = (queue -> front + 1) % BUFFERSIZE; // Update front (wrap around)

queue -> count--; // Decrease element count

if (queue -> count > 0) {

queue -> queue_start_idx = queue->data[queue->front].idx;

}

return 1;

}
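The listing above relies on isFull and isEmpty, which are not shown. The sketch below is a reduced, self-contained version of the same cyclic queue that can be compiled on a PC. The names `Ring`, `Sample`, `ring_push`, `ring_pop` and the capacity of 4 are hypothetical, and the full/empty checks based on `count` are an assumption about how the original helpers work.

```c
#include <stdint.h>

#define RING_CAPACITY 4            /* hypothetical; the original uses BUFFERSIZE */

typedef struct {
    int idx;                       /* reduced stand-in for SensoryData_t */
} Sample;

typedef struct {
    Sample data[RING_CAPACITY];
    int front;                     /* index of the oldest element */
    int rear;                      /* index of the next free slot */
    int count;                     /* number of stored elements   */
} Ring;

void ring_init(Ring *q) { q->front = 0; q->rear = 0; q->count = 0; }

/* Assumed behavior of isEmpty / isFull: decide from the element count. */
int ring_is_empty(const Ring *q) { return q->count == 0; }
int ring_is_full(const Ring *q)  { return q->count == RING_CAPACITY; }

/* Insert at the rear; returns 0 if the queue is full. */
int ring_push(Ring *q, Sample s) {
    if (ring_is_full(q)) return 0;
    q->data[q->rear] = s;
    q->rear = (q->rear + 1) % RING_CAPACITY;   /* wrap around */
    q->count++;
    return 1;
}

/* Remove from the front; returns 0 if the queue is empty. */
int ring_pop(Ring *q, Sample *out) {
    if (ring_is_empty(q)) return 0;
    *out = q->data[q->front];                  /* struct copy of the whole sample */
    q->front = (q->front + 1) % RING_CAPACITY; /* wrap around */
    q->count--;
    return 1;
}
```

Keeping an explicit `count` avoids the usual ambiguity of cyclic buffers, where `front == rear` could mean either empty or full.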

In the main calculation loop, we keep popping elements from the queue and comparing each one with the previously popped element. A flag Recog, initialized to 0, indicates whether we are collecting data for pose recognition. If $Recog == 1$, we place the new data into a buffer that we use for pose recognition. If the new data is not continuous in index with the previous data ($Index_{new} \neq Index_{old} + 1$), we clear the buffer and set Recog to 0.

If the new data has an index that is continuous with the previous data, we consider the following 3 cases:

- If we are not in Recog mode and the new data is similar to the previous data, we consider the wand to be in a stable state because the user is not waving it for pose recognition. In this case, we simply update the previous data and do nothing else.

- If we are not in Recog mode and the new data is vastly different from the previous data, we enter Recog mode by setting Recog = 1 and STABLETIMEGAP = 0.

- If we are in Recog mode, we add the new data to a buffer for vectorized pose recognition. If the new data is similar to the previous data, we increment the stable time counter by 1 (STABLETIMEGAP++). If the wand sensors stay stable for a long period of time (STABLETIMEGAP > Threshold), we consider that the user has finished waving the wand and set Recog = 0.

Pose Recognition Algorithm

After we enter and leave Recog mode (the flag is set to 1 and then back to 0), the time-series data is stored in an array for pose recognition. We currently perform pose recognition with a thresholding method based on the sharp turns detected in the series data. This method proved quite effective and, more importantly, can run in real time on the edge, unlike Deep Learning algorithms, which are not practical to implement on such a lightweight platform given the effort required.

Specifically, we use the number of sharp turns as a fingerprint to classify the gesture into different types of commands. Consider two neighboring samples D1 and D2 with D2.idx = D1.idx + 1. We say there is a sharp turn between them if the change in angular reading exceeds a fixed threshold:

$\sqrt{\Delta\omega_x^2 + \Delta\omega_y^2 + \Delta\omega_z^2} > Threshold$, where $\Delta\omega_x$, $\Delta\omega_y$, $\Delta\omega_z$ are the differences between the angular X, Y, Z values of D2 and D1.

Based on the number of sharp turns found in the buffer, we could classify the waving action into drawing different shapes in the air. Below are samples of recognizing triangles and quadrilaterals

Figure 10: Example of Pose Recognition

The implementation of the above formula is illustrated below

static int compute_angular_Change(SensoryData_t* prior_data, SensoryData_t* current_data) {

int d_angular_x = current_data -> gyro_Angular_X - prior_data -> gyro_Angular_X;

int d_angular_y = current_data -> gyro_Angular_Y - prior_data -> gyro_Angular_Y;

int d_angular_z = current_data -> gyro_Angular_Z - prior_data -> gyro_Angular_Z;

int angular_shift = sqrt(d_angular_x * d_angular_x + d_angular_y * d_angular_y + d_angular_z * d_angular_z);

return angular_shift;

}
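One possible variation on the check above, shown only as a sketch: since both sides of the comparison are non-negative, the square root can be skipped by comparing the squared magnitude against the squared threshold, which keeps everything in integer arithmetic. `is_sharp_turn` is a hypothetical helper, and the three-element arrays stand in for the angular X, Y, Z fields of two samples.

```c
#include <stdint.h>

/* Returns 1 if the change between two angular readings exceeds the threshold.
   Assumes threshold >= 0. Compares squared values to avoid calling sqrt(). */
int is_sharp_turn(const int prev[3], const int curr[3], int32_t threshold) {
    int64_t sum = 0;
    for (int i = 0; i < 3; ++i) {
        int64_t d = (int64_t)curr[i] - prev[i];   /* per-axis difference */
        sum += d * d;                             /* accumulate squared magnitude */
    }
    return sum > (int64_t)threshold * threshold;
}
```

For a change of (3, 4, 0) the magnitude is exactly 5, so the turn counts as sharp for a threshold of 4 but not for a threshold of 5.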

To further improve throughput, we merged taking elements from the queue and performing classification into one function. It keeps track of the previously popped element and compares it with the new element to compute the angular shift. If we are currently in tracking mode (Recog == 1) and the shift exceeds the threshold, we increment the number of sharp turns by 1.

static int execute_calculations(SensoryData_t* current_data, SensoryData_t* prior_data) {

if (!dequeue(GyroQueue, current_data)) {

// the queue is currently empty

return -1;

} else {

//the queue is not empty

int result = -1;

int prior_idx = prior_data -> idx;

int current_idx = current_data -> idx;

if (current_idx != prior_idx + 1) {

// discontinuous cnt caused by package loss, replace the PriorSensory data with new data

copy_sensory_data(current_data, prior_data);

if (TRACKING) {

STABLETIMEGAP += current_idx - prior_idx;

}

} else {

// the data is purely continuous

int angular_shift = compute_angular_Change(prior_data, current_data);

if (angular_shift > ANGULAR_THREHSOLD) {

if (TRACKING) {

STABLETIMEGAP = 0;

SHARPTURNCOUNTER += 1;

} else {

TRACKING = 1;

STABLETIMEGAP = 0;

SHARPTURNCOUNTER = 0;

}

}

copy_sensory_data(current_data, prior_data);

}

if (STABLETIMEGAP > GAP_TILL_EXIT) {

result = SHARPTURNCOUNTER;

//reset the time gap to be zero to allow restarting

STABLETIMEGAP = 0;

return result;

}

return result; // still -1 here: no gesture has finished yet

}

}

This keeps the processing time per sample much shorter than the IMU sampling interval, which prevents data from piling up in the queue.
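The segmentation logic described in this section can be condensed into a small state machine. The following is a minimal sketch under stated assumptions, not the project’s implementation: it takes the angular shift as input directly, counts quiet samples while tracking (as described in the three cases earlier), and leaves tracking mode when a gesture ends. `GestureState`, `gesture_step` and both threshold values are hypothetical.

```c
typedef struct {
    int tracking;      /* 1 while a gesture is being recorded */
    int stable_gap;    /* consecutive quiet samples while tracking */
    int sharp_turns;   /* sharp turns counted in the current gesture */
} GestureState;

#define SKETCH_SHIFT_THRESHOLD 50   /* hypothetical angular-shift threshold */
#define SKETCH_GAP_TILL_EXIT 3      /* hypothetical number of quiet samples before exit */

/* Feed one angular shift. Returns the sharp-turn count when a gesture ends, else -1. */
int gesture_step(GestureState *s, int angular_shift) {
    if (angular_shift > SKETCH_SHIFT_THRESHOLD) {
        if (s->tracking) {
            s->sharp_turns += 1;        /* another sharp turn inside the gesture */
        } else {
            s->tracking = 1;            /* first large movement starts a gesture */
            s->sharp_turns = 0;
        }
        s->stable_gap = 0;
        return -1;
    }
    if (!s->tracking) return -1;        /* idle and quiet: nothing to do */
    s->stable_gap += 1;                 /* quiet sample during a gesture */
    if (s->stable_gap > SKETCH_GAP_TILL_EXIT) {
        int result = s->sharp_turns;    /* gesture finished: report the count */
        s->tracking = 0;
        s->stable_gap = 0;
        return result;
    }
    return -1;
}
```

The returned count would then be mapped to a command, for example by a lookup from the number of sharp turns to a gesture type.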

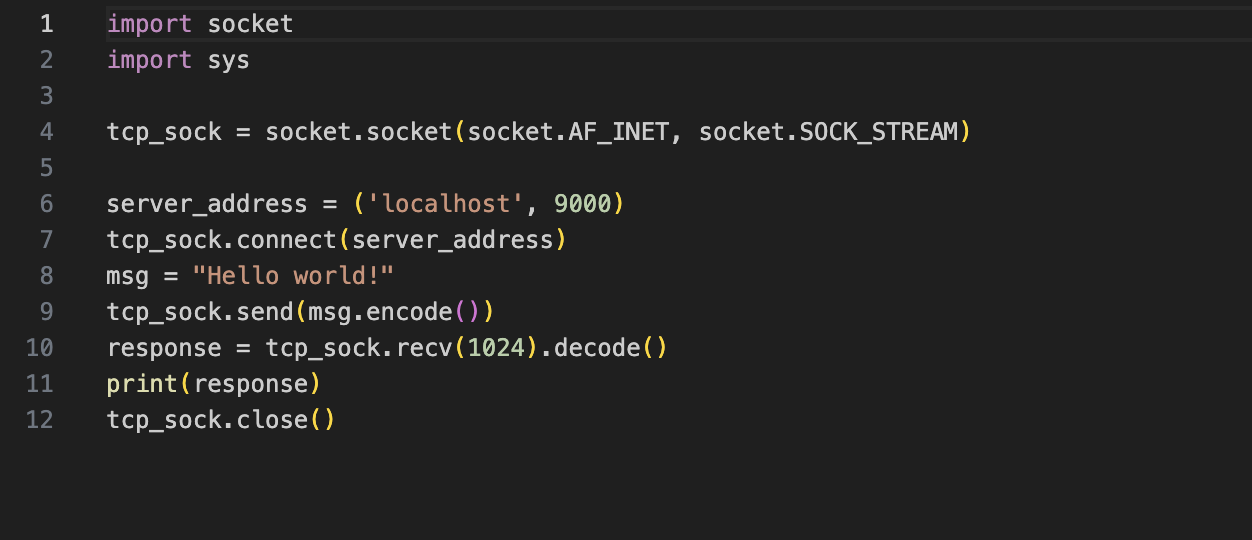

Wireless communication

Simply put, we use an ESP32 and a Nano 33 for wireless communication, as both are equipped with Wi-Fi modules. The Nano 33 recognizes hand gesture commands and transmits them to the ESP32 via Wi-Fi. The ESP32 then sends the commands to the ATmega328PB through SPI.

We set up a private network, configuring the ESP32 and Nano 33 to be on the same local network. Each device is assigned its own IP address, which we define in the code for both transmitting and receiving functionalities. This setup ensures continuous communication between the devices.

ESP32 (Receiver)

- Declaring Wi-Fi Credentials

The code defines the SSID and password of the Wi-Fi network as constants:

const char* ssid = "R9000P";

const char* password = "12348765";

These variables store the network name (ssid) and its corresponding password (password) required to connect to the Wi-Fi.

- Setting Up a Wi-Fi Server

A Wi-Fi server is initialized to listen on a specific port (1234 in this case):

const uint16_t port = 1234; // Port to listen on

WiFiServer server(port);

The WiFiServer object, server, will handle incoming client connections on the defined port.

- Wi-Fi Connection

The connectToWiFi function establishes the connection to the specified Wi-Fi network:

void connectToWiFi()

{

WiFi.begin(ssid, password); // Start connecting to the Wi-Fi network

while (WiFi.status() != WL_CONNECTED) // Wait until the connection is established

{

delay(1000); // Retry every second until connected

}

}

WiFi.begin(ssid, password) initiates the connection to the Wi-Fi network using the provided credentials. The while loop makes the program wait until the device is connected to the network by repeatedly checking the connection status until WiFi.status() == WL_CONNECTED.

- Starting the Wi-Fi Server

In the setup function, after the Wi-Fi connection is established, the server begins listening for client connections:

server.begin();

- Handling Client Connections

In the loop function, the server checks for incoming client connections using server.available():

WiFiClient client = server.available();

if (client)

{

// Wait for and process client data

}

If a client connects, the server enters a loop to listen for data sent by the client. Once the communication ends, the client connection is stopped using client.stop().

Nano33 (Sender)

Similarly, we first declare the Wi-Fi credentials as before; the code is the same. The core parts are:

- Server Configuration

The script defines two servers with corresponding IP addresses and ports:

const char server_esp32[] = "192.168.137.156";

const uint16_t port_esp32 = 1234;

const char server_py[] = "192.168.137.218";

const uint16_t port_py = 1234;

server_esp32 and server_py represent the server addresses for communication with the ESP32 and a Python server, respectively.

port_esp32 and port_py specify the ports to be used for the connections.

- Connecting to Servers

The connectToServer function handles the connection to a specified server:

bool connectToServer(WiFiClient* client, const char * server, const uint16_t port)

{

if (DEBUG)

{

Serial.print("Connecting to server ");

Serial.print(server);

Serial.print(":");

Serial.println(port);

}

if ((*client).connect(server, port)) // Attempt connection

{

if (DEBUG)

Serial.println("Connected to server!");

return true; // Connection successful

}

else

{

if (DEBUG)

Serial.println("Connection to server failed.");

return false; // Connection failed

}

}

The function uses a WiFiClient object to establish the connection, then checks and logs the connection status for debugging.

- Sending Messages

The sendMessage function sends data to the connected server:

void sendMessage(WiFiClient* client, const char* message)

{

if ((*client).connected())

{

if (DEBUG)

{

Serial.print("Sending: ");

Serial.println(message);

}

(*client).println(message); // Send the message

}

else

{

if (DEBUG)

Serial.println("Client not connected. Cannot send message.");

}

}

The function verifies that the client is connected before sending a message using println.
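The receiving side has to turn the text line produced by println back into a command number. The exact message format is not shown in this report, so the sketch below assumes that each message is a single ASCII digit ("1", "2" or "3") followed by the line ending that println appends. `parse_command` is a hypothetical helper, written in plain C so it can be tested off the board.

```c
#include <stdint.h>

/* Parse one received text line such as "2\r\n" into a command (1..3).
   Returns 0 for anything that is not a single valid digit. */
uint8_t parse_command(const char *line) {
    if (line == 0) return 0;
    char c = line[0];
    if (c < '1' || c > '3') return 0;             /* only commands 1, 2, 3 exist */
    char next = line[1];
    /* Accept only end of string or a line ending after the digit. */
    if (next != '\0' && next != '\r' && next != '\n') return 0;
    return (uint8_t)(c - '0');
}
```

Rejecting malformed input with 0 means that a corrupted or partial message does not toggle the door, light or pump by accident.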

Hardware Requirement Specification

- HRS01: The motor can successfully open and close the designed door. Validation:

- HRS02: The LED lights on the wand can respond to different commands with various effects, achieving flowing light effects instead of just functioning as a basic on/off LED. Validation:

Software requirement Specification

- SRS01:Achieve at least 80% gesture accuracy, minimizing false positives/negatives. Importance: Ensures that users can reliably control home devices without frustration or repeated attempts.

- Test Method:

We performed each gesture 100 times and recorded the recognized results. The graph below shows the simulation results:

Figure 10: The Simulation Results

The Summary Table:

| Posture | Total Tests | Accuracy | Misrecognized as Gesture 1 | Misrecognized as Gesture 2 | Misrecognized as Gesture 3 |
|---|---|---|---|---|---|
| 1 | 100 | 90% | 0 | 5 | 5 |
| 2 | 100 | 89% | 7 | 0 | 4 |
| 3 | 100 | 95% | 3 | 2 | 0 |

The test results show that we meet our expectation: every gesture exceeds the 80% accuracy target.
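The per-gesture figures in the table can be cross-checked, and an overall accuracy derived, by treating the table as a confusion matrix. In the sketch below the diagonal entries are the correct counts implied by the accuracy column (90, 89 and 95 out of 100) and the off-diagonal entries are the misrecognition counts from the table. The function names are hypothetical.

```c
/* Rows: performed gesture; columns: recognized gesture (diagonal = correct). */
const int confusion[3][3] = {
    {90,  5,  5},
    { 7, 89,  4},
    { 3,  2, 95},
};

/* Total number of trials for one performed gesture. */
int row_total(int gesture) {
    int total = 0;
    for (int j = 0; j < 3; ++j) total += confusion[gesture][j];
    return total;
}

/* Fraction of trials of one gesture that were recognized correctly. */
double gesture_accuracy(int gesture) {
    return (double)confusion[gesture][gesture] / row_total(gesture);
}

/* Fraction of all trials that were recognized correctly. */
double overall_accuracy(void) {
    int correct = 0, total = 0;
    for (int i = 0; i < 3; ++i) {
        correct += confusion[i][i];
        total += row_total(i);
    }
    return (double)correct / total;
}
```

Each row sums to 100 trials, and the overall accuracy is 274 / 300, or about 91.3%.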

- SRS02: Achieve a response time under 200 ms, allowing users to feel immediate feedback from devices. Validation:

- SRS03: Successfully achieve wireless communication. Validation:

Summary

We successfully completed all our goals and designs on time. From wireless communication to gesture recognition and data processing, we met the requirements and objectives we initially set. However, there are still areas for improvement:

- LED Strip Effects: The LED strip effects we implemented using bare C are quite limited. In the future, we can explore adding more effects and flashing patterns to more realistically simulate the spell-casting process.

- Gesture Recognition: We opted against using ML-based methods due to limited on-device computational power. Instead, setting thresholds allowed us to achieve the desired performance. For more complex gestures, we could consider transmitting data to a computer via Wi-Fi for processing and then sending the result back. However, this would increase both development time and response latency. As a result, the threshold-based method remains the most practical solution for now.

- Smart Furniture Models: We can enhance the furniture model by integrating more smart devices to expand functionality.

These improvements would make the system more robust and feature-rich.

Sprint review 2

Current state of project

The team has made substantial progress on several critical tasks this week, continuing to move closer to achieving our project goals. Key developments include successful communication with sensors, early stages of wireless module integration, and preparations for component integration testing. Our focus is on refining individual components, with a special emphasis on ensuring compatibility across the different hardware modules. We are on track to integrate these modules in the upcoming sprint.

Last week’s progress

Current State Overview

This week, the team has made excellent progress, achieving several important milestones. Notably, we have successfully tested and integrated key hardware communication methods, including wireless communication, sensor data reading, and LED control. As we prepare for component integration, the next focus will be on comprehensive testing and ensuring the modules work cohesively. We are on schedule to advance to full system integration in the next sprint.

Nano 33 IMU & Gyroscope Check

- Description: This task involved figuring out how to read data from the onboard IMU & Gyroscope of the Nano 33 device.

- Status: Completed

- Outcome: The team successfully figured out the communication method with the IMU & Gyroscope. Using the bare-metal code approach, the device now correctly outputs data from the IMU & Gyroscope. A fallback method using the appropriate libraries was also considered, but was not needed since the communication was established. We have some successful data output under ./data/nano33_gyroscope_IMU_data.

- Time Spent: 6 hours

Gyroscope Reading

Investigation of ESP32 on Atmega328

- Description: The goal was to familiarize ourselves with the ESP32 module, with an eye toward establishing wireless communication.

- Status: Completed

- Outcome: The team successfully investigated communication protocols like SPI and UART. The ESP32 now communicates with the Atmega328pb for data transmission, and the best protocol for communication with the Nano 33 was identified.

- Time Spent: 3 hours

LED Light Strip Configuration

- Description: This task aimed to write code for controlling an LED light strip.

- Status: Completed

- Outcome: The team successfully wrote bare-metal C code to control the LED light strip, allowing for changes in color and brightness. The LED strip now functions as expected, which will be useful for providing visual feedback during testing and future project phases. The code has been written into library under ./328pb/lib/ws2812b_control.

- Time Spent: 5 hours

Develop Testing Plan for Integration

- Description: This task focused on creating a testing plan for integrating various components of the project, especially posture recognition and Bluetooth modules.

- Status: Completed

- Outcome: The testing plan was completed, outlining key test cases and success criteria for each integration point. The plan includes evaluating posture recognition accuracy, Bluetooth communication stability, and power management efficiency during integration. This will be critical for the next steps of the project as we begin integrating the components.

- Time Spent: 3 hours

Wireless communication is achieved

- Description: Successfully send data from the Nano 33 to another device connected to the same Wi-Fi network, testing the functionality of the wireless communication. Both sending and receiving are achieved.

- Status: Completed

- Outcome: Wireless communication between the Nano 33 and the connected device was successfully established. The team was able to send and receive data, validating the functionality of the communication link. This achievement sets the foundation for future wireless interactions in the project.

- Time Spent: 6 hours

Next week’s plan

Task: Construct dataset for gyroscope classification

- Description: Record and label dataset from readings of gyroscope when drawing different gestures, including but not limited to squares, circles, triangles, etc

- Estimated Time: 5 hours

- Assigned to: Yudong Liu

- Definition of Done: Record at least 20k lines of data, such that each line of data corresponds to a set of 3-axis gyroscope sampled data.

- Detailed Description: We connect the Nano 33 to the serial port and write to a log file while we wave the wand. To mark the start and end of each gesture during recording, we use a program that inserts a breakpoint into the log file when a key is pressed at the start and end of each gesture. We use 2-3 seconds of continuous time-series data for each gesture instance.

Task: Investigate the naive algorithm of classifying simple shapes such as circles, squares, triangles, etc as gestures

- Description: Write and test an algorithm that uses a thresholding method for pose recognition

- Estimated Time: 2 hours

- Assigned to: Yudong Liu

- Definition of Done: Being capable of distinguishing square, circle and triangular shapes through thresholding on the time-series data of the gyroscope

- Detailed Description: Write a function that takes the sequence of 3 axis data as input, and accumulates over a period of time (maximal 3 seconds in the past) to identify possible signs of different postures (number of sharp turns or a periodic circular movement for example).

Task: Investigate the machine learning based algorithm for pose recognition

- Description: Write and test a model (such as KNN) for mapping poses to an embedding vector

- Estimated Time: 5 hours

- Assigned to: Yudong Liu

- Definition of Done: Write code that maps time-series pose data to a vector after denoising, and report the accuracies of random pose generation

- Detailed Description: When testing, connect the wand to a desktop through either Wi-Fi or Bluetooth and send the raw data series (3-axis gyroscope data) to the desktop, where it is concatenated for matrix calculation.
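A desktop-side KNN over concatenated samples might look like the sketch below. It assumes every gesture window has already been resampled to the same number of samples; the helper names `flatten` and `knn_predict` are hypothetical, and denoising is left out.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]


def flatten(window: Sequence[Sample]) -> List[float]:
    """Concatenate a window of 3-axis samples into one feature vector."""
    return [value for sample in window for value in sample]


def knn_predict(
    train: List[Tuple[List[float], str]],
    query: List[float],
    k: int = 3,
) -> str:
    """Return the majority label among the k nearest training vectors.

    Distances are Euclidean; all vectors must have the same length.
    """
    by_distance = sorted((math.dist(vec, query), label) for vec, label in train)
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]
```

Plain Euclidean distance on raw samples is sensitive to gesture speed and start time, so a real model would likely need normalization or a learned embedding on top of this.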

Task: Test control mechanisms of the wand

- Description: Decide on the ground-truth gestures and store, for the LEDs and motors (the smart home setting), the actions they perform in response to instructions from the wand

- Estimated Time: 6 hours

- Assigned to: Guanlin & Jiajun

- Definition of Done: When the wand sends the correct instructions, the devices in the smart home operate as planned

- Detailed Description: Set up the hardware and connect it to the ATmega328PB and the ESP32. Write functions for controlling the different devices.

Task: Integration of the wand model and the home model

- Description: Assemble the 3D-printed and laser-cut parts into a working model

- Estimated Time: 10 hours

- Assigned to: Guanlin Li & Jiajun Chen & Yudong Liu

- Definition of Done: The model works as described in the project expectations.

- Detailed Description: Integrate the different parts: install the motor, light, and pump in the home model, and install the LED strip, Nano 33, and battery on the wand.

Sprint review #1

Current state of project

This week, we completed the draft design for the home model, which was showcased successfully. However, due to the unavailability of actual components, we could not measure dimensions and therefore have not yet designed the wand model. We secured access to the RPL lab and are currently evaluating options to either 3D print the home model or use laser cutting to assemble the basic shell as soon as possible. Additionally, we studied the Nano 33 datasheet to understand Bluetooth communication and the IMU module, ensuring that future communication between wands can be implemented effectively.

Current Status

The project is making steady progress with key foundational tasks completed and a clear roadmap for upcoming activities. The home model design has been finalized, and we are preparing for its physical prototyping through either 3D printing or laser cutting. Access to the RPL lab has been obtained, facilitating the next steps for hardware assembly. While the wand model design is pending due to the absence of necessary components for measurement, we have advanced in understanding the technical aspects of the Nano 33, including Bluetooth communication and the IMU module. Additionally, we tested the basic communication capabilities of the Nano 33 by successfully controlling an LED light via remote commands. This lays a solid groundwork for implementing inter-wand communication in the future. The focus now is on transitioning from design to prototyping and integrating hardware and communication systems.

Last week’s progress

Completed the Planned Tasks of the last week

- Finalize and order remaining components, ensure all required parts are in stock and accounted for.

- Document all decisions, discussions, and tasks completed to date in a mini-report.

- Create a testing plan for integrating components once all are available, especially focusing on posture recognition and Bluetooth modules.

- Identified new items to be added to our procurement list.

Still In Progress:

- Initial Integration of Nano 33 MCU

- Begin setting up the Nano 33 MCU for posture recognition, test Bluetooth communication and gyroscope integration.

We are still working on the posture recognition test and reading the Nano 33 datasheet. We plan to get basic posture recognition working next week and run a demo trial on the Nano 33.

Extra Work:

1. Home Model Design and Access to a 3D Printer

- Finished the basic construction of the home model in SolidWorks

- Obtained access to the RPL Lab and the Venture Lab for printing our model in the future

Proof of Work:

- GitHub Commits: Update Model File

- Proof of Access to the RPL Lab and the Venture Lab

2. Nano 33 Datasheet Reading and Basic Function Test

- Read part of the documentation on Bluetooth communication with the Nano 33

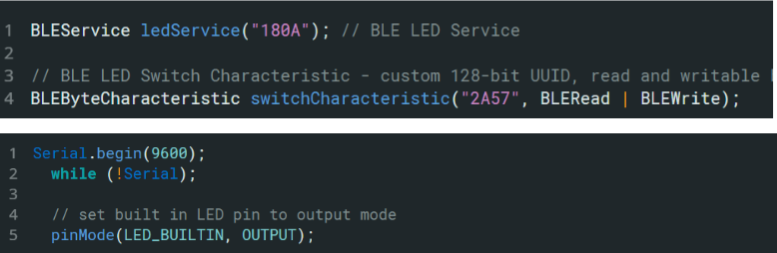

- Tested wireless control with a simple demo: turning an LED on and off.

Proof of Work:

- The following is part of the demo code:

Test Existing implementation for gyroscope data reading

Servo Motor Test

- Tested the functionality of the motor based on the datasheet

- The motor works successfully under control of the ATmega328PB.

Proof of Work:

- GitHub Commits: Update Model File

Our motor can function properly.

LED strip Test

Have tested the functionality of the LED strip.

Next week’s plan

Task: Nano 33 IMU & Gyroscope check

- Description: Figure out how to read data from the Nano 33's onboard IMU and gyroscope.

- Estimated Time: 6 hours

- Assigned to: Guanlin & Jiajun & Yudong Liu

- Definition of Done: The Nano 33 successfully prints correct data from the IMU gyroscope.

- Detailed Description: Read through the existing code and the datasheet to work out how to communicate with the sensor using bare-metal code. If that fails, use the library and make sure every part of it is understood.

Task: Investigation of the ESP32 with the ATmega328PB

- Description: Become familiar with using the ESP32 module with the ATmega328PB for further wireless communication.

- Estimated Time: 6 hours

- Assigned to: Jiajun & Li Guanlin

- Definition of Done: The ESP32 can successfully communicate with the ATmega328PB, including receiving and sending data

- Detailed Description: Investigate different communication protocols, including SPI and UART, and find the best way to communicate with the Nano 33.

Task: LED light strip configuration

- Description: Write a module to control the LED light strip

- Estimated Time: 5 hours

- Assigned to: Guanlin Li & Yudong Liu

- Definition of Done: Code that can control the color and brightness of the LED strip.

- Detailed Description: Implement the code using bare metal C and successfully control the LED light strip to display different colors.

Task: Develop Testing Plan for Integration

- Description: Create a testing plan for integrating components once all are available, especially focusing on posture recognition and Bluetooth modules.

- Estimated Time: 3 hours

- Assigned to: Liu Yudong

- Definition of Done: Testing plan is completed, including test cases and success criteria for integration of key modules.

- Detailed Description: Outline test cases, methods, and success criteria for each integration point, including posture recognition accuracy, Bluetooth stability, and power management.

Sprint review trial

Current state of project

We finalized the selection of necessary components, including in-depth discussions and considerations for specific details such as the power supply, MCU, and IMU & gyroscope module.

Additionally, we updated our project implementation approach and identified new items to be procured. For the posture recognition module, we optimized the use of the gyroscope and decided to incorporate a new MCU, the Nano 33, which combines Bluetooth communication and a gyroscope, allowing us to reduce costs. This decision was reached after extensive discussions within the team and on the TA’s advice. We also clarified the allocation of responsibilities among team members, completed the project contract, strengthened team cohesion, and established our final objectives.

Last week’s progress

Component Selection

- Finalized the necessary components and discussed specific elements like power supply requirements.

- Updated our approach to implementing the project based on these component choices.

- Identified new items to be added to our procurement list.

Posture Recognition Module

- Selected a new MCU, the Nano 33, which integrates Bluetooth communication and a gyroscope, reducing costs. This decision was made after extensive team discussion.

Team Coordination and Planning

- Finalized the allocation of responsibilities among team members.

- Completed the project contract, reinforcing team cohesion and clearly defining our objectives.

Proof of Work:

- GitHub Commits: Update BOM for hardware of this project.

- Mini-reports: Discussions on power supply and component optimization in the project repository.

- Observations: Realized that using the Nano 33 would allow cost savings and simplify Bluetooth integration, based on team discussions and testing.

Hardware Status

- Most of the required components have been selected and submitted for purchase, including backups where necessary.

- Testing of currently available components has shown that they are working as expected.

Next week’s plan

Task: Ordering and Inventory Check

- Description: Finalize and order remaining components, ensure all required parts are in stock and accounted for.

- Estimated Time: 2 hours

- Assigned to: Jiajun Chen

- Definition of Done: All required components, including backups, have been ordered and an updated inventory list is created.

- Detailed Description: Confirm the purchase of additional components identified this week and update the inventory to reflect all items needed for the project.

Task: Initial Integration of Nano 33 MCU

- Description: Begin setting up the Nano 33 MCU for posture recognition, test Bluetooth communication and gyroscope integration.

- Estimated Time: 4 hours

- Assigned to: Liu Yudong & Li Guanlin

- Definition of Done: Nano 33 is successfully integrated with initial posture recognition tests and Bluetooth communication functioning.

- Detailed Description: Install and configure the Nano 33, set up Bluetooth communication, and perform initial tests to confirm it meets project requirements.

Task: Documentation of Current Progress

- Description: Document all decisions, discussions, and tasks completed to date in a mini-report.

- Estimated Time: 3 hours

- Assigned to: Jiajun Chen & Guanlin Li

- Definition of Done: A detailed report summarizing current project status, decisions made, and progress on each component and task.

- Detailed Description: Create a structured document that includes updates on component choices, hardware testing results, and team discussions on implementation strategy.

Task: Develop Testing Plan for Integration

- Description: Create a testing plan for integrating components once all are available, especially focusing on posture recognition and Bluetooth modules.

- Estimated Time: 2 hours

- Assigned to: Liu Yudong

- Definition of Done: Testing plan is completed, including test cases and success criteria for integration of key modules.

- Detailed Description: Outline test cases, methods, and success criteria for each integration point, including posture recognition accuracy, Bluetooth stability, and power management.

Final Project Proposal

1. Abstract

Home automation is increasingly transforming modern households with smart technology, enabling users to control and monitor their home environment conveniently. The goal of the team’s project is to design and construct an innovative home automation system centered on an interactive smart wand, leveraging the strengths of teamwork and technical expertise. The project’s design scheme includes an integrated electronic system that supports a range of functions, allowing users to wirelessly control household devices such as lights, doors, and pet water dispensers through customized wand gestures and commands. The project is carried out by the team, with each member contributing to various aspects of the design and with the flexibility to choose hardware components within a specified budget.

2. Motivation